Nvidia claims more AI from fewer chips is good for business.

China’s new DeepSeek R1 language model has been shaking things up by reportedly matching or even beating the performance of established rivals including OpenAI while using far fewer GPUs. Nvidia’s response? R1 is “excellent” news that proves the need for even more of its AI-accelerating chips.

If you’re thinking the math doesn’t immediately add up, the stock market agrees: $600 billion has been wiped off Nvidia’s market value this week.

So, let’s consider a few facts for a moment. Reuters reports that DeepSeek’s development entailed 2,000 of Nvidia’s H800 GPUs and a training budget of just $6 million, while CNBC claims that R1 “outperforms” the best LLMs from the likes of OpenAI and others.

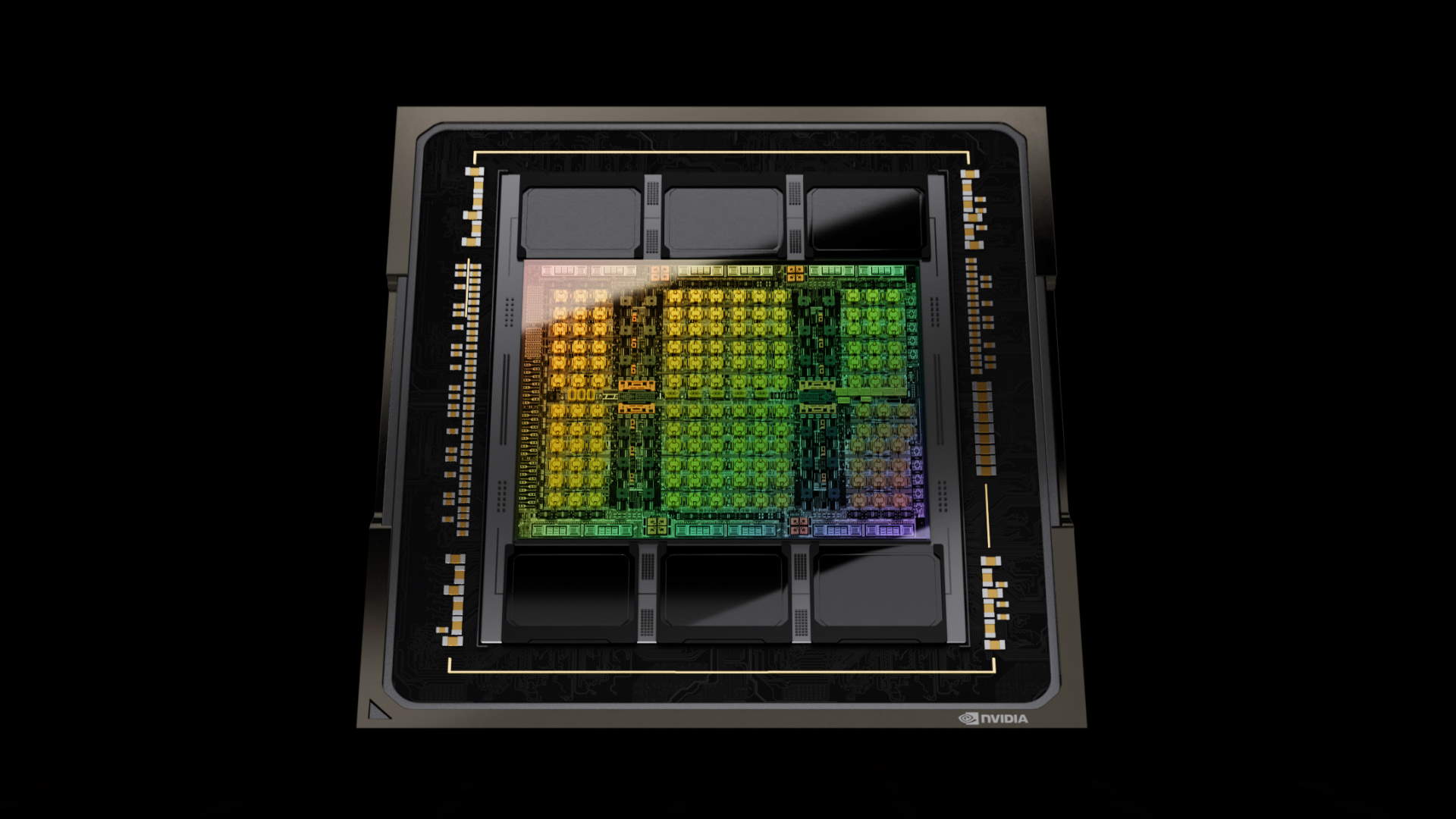

The H800 is a special variant of Nvidia’s Hopper H100 GPU that’s been hobbled to fit within the US’s export restriction rules for AI chips. Some in the AI industry claim that China generally, and DeepSeek in particular, have managed to dodge the export rules and acquire large numbers of Nvidia’s more powerful H100 GPUs, but Nvidia has denied that claim.

Meanwhile, it’s thought OpenAI used 25,000 of Nvidia’s previous-gen A100 chips to train GPT-4. It’s hard to compare the A100 to the H800 directly, but it certainly seems like DeepSeek got more done with fewer GPUs.

That’s why the market has the yips when it comes to Nvidia. Maybe we don’t need quite as many chips for the AI revolution as was once thought?

Needless to say, Nvidia doesn’t see it that way, lauding R1 for demonstrating how the so-called “Test Time” scaling technique can help create more powerful AI models. “DeepSeek is an excellent AI advancement and a perfect example of Test Time Scaling,” the company told CNBC. “DeepSeek’s work illustrates how new models can be created using that technique, leveraging widely-available models and compute that is fully export control compliant.”

Of course, all may not be quite as it seems. As Andy reported yesterday, some observers think DeepSeek is actually spending more like $500 million to $1 billion a year. But the general consensus is that DeepSeek is getting more done for much less investment than the established players.

Microsoft, for instance, expects to spend $80 billion on AI hardware this year, with Meta saying it will unload $60 to $65 billion. DeepSeek’s R1 model seems to imply that similar results can be achieved for an order of magnitude less investment.

The question is, does that automatically mean fewer GPUs being bought? Perhaps not. At the kind of investment levels demonstrated by the likes of Microsoft and Meta, the number of organisations that can get involved is necessarily limited. It’s just too expensive.

Cut that to a tenth or less and suddenly the number of potential participants might explode. And they’ll all want GPUs. It’s a little like the move from mainframe to personal computing. Sure, each individual investment in computing was a lot smaller, but the computing business overall grew far larger.
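
To put rough numbers on that argument, here is a back-of-the-envelope sketch in Python. It assumes the cost of getting involved falls to a tenth of today's level and uses Microsoft's reported $80 billion as the baseline. The function names and the example growth figure are purely illustrative, not forecasts.

```python
# Back-of-the-envelope check on the "cheaper AI, more buyers" argument.
# Only the $80 billion figure and the "a tenth" cost cut come from the article;
# the function names and any growth factor passed in are illustrative assumptions.

MICROSOFT_AI_SPEND_USD = 80e9  # Microsoft's expected AI hardware spend this year
COST_FRACTION = 0.1            # per-participant cost falls to a tenth


def spend_per_participant(baseline_usd: float, cost_fraction: float) -> float:
    """Outlay each participant needs once costs fall to `cost_fraction` of baseline."""
    return baseline_usd * cost_fraction


def break_even_growth(cost_fraction: float) -> float:
    """How many times more buyers are needed to keep total spending flat."""
    return 1.0 / cost_fraction


def total_spend_ratio(cost_fraction: float, participant_growth: float) -> float:
    """Total spending relative to today (above 1.0 means the market grew)."""
    return cost_fraction * participant_growth


if __name__ == "__main__":
    per_buyer = spend_per_participant(MICROSOFT_AI_SPEND_USD, COST_FRACTION)
    print(f"Spend per participant: ${per_buyer / 1e9:.0f} billion")
    print(f"Buyer growth needed to break even: {break_even_growth(COST_FRACTION):.0f}x")
    # Hypothetical scenario: 25 times as many organisations get involved.
    print(f"Total spend vs today at 25x buyers: {total_spend_ratio(COST_FRACTION, 25):.1f}x")
```

In other words, under these assumptions the number of buyers has to grow more than tenfold before overall GPU spending rises. That is the bet implied by the mainframe comparison.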

So, maybe DeepSeek is an inflection point. From here on, AI development is more accessible, less dominated by a small number of hugely wealthy entities, and maybe even a bit more democratic.

Or maybe we’ll find out that DeepSeek has used a mountain of H100s after all. Either way, Nvidia will be planning to make its own mountain—of cash.