That's Nvidia's prediction. Mine is more profits.

I’m not usually one for extravagant tech and hardware predictions—things can change so much and so unpredictably that it’s difficult to put too much weight behind such premonitions. But when it comes to AI tech, things move so quickly that what seems distant might not actually be too far away. Combine this with Nvidia being the one outlining the futuristic vision, and I take it a little more seriously.

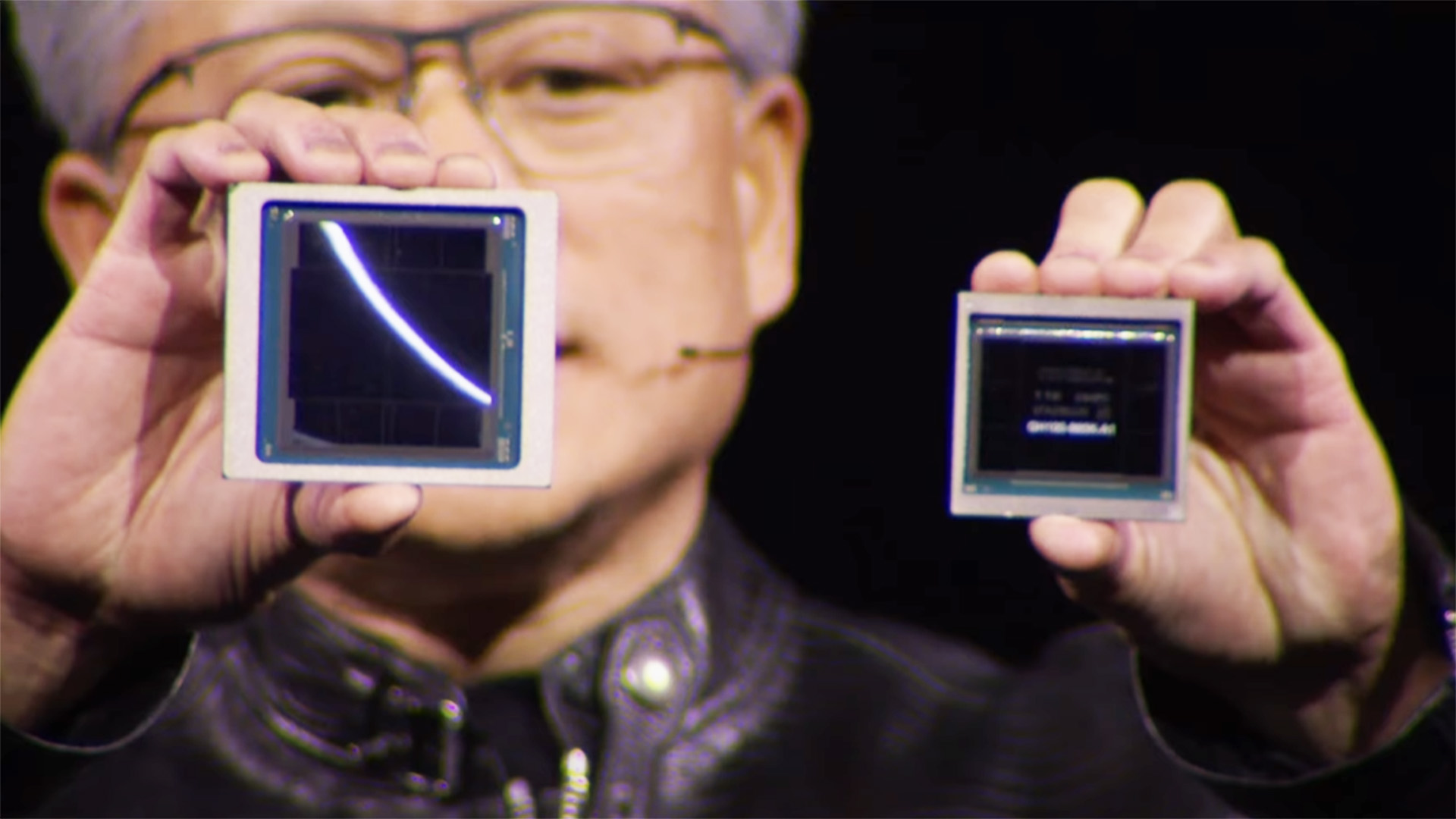

Nvidia’s latest prediction, as outlined at the IEDM 2024 conference according to Dr. Ian Cutress (via TechPowerUp), is AI accelerators that are 3D-stacked and that use—at least in part—silicon photonics for data transmission. This is, as Cutress puts it, Nvidia’s vision of “the future of AI compute”.

The image provided in the post shows an AI accelerator (i.e. a datacentre GPU) that’s split vertically into a substrate, integrated silicon photonics, GPU tiers, 3D-stacked DRAM, and a cold plate.

The two big innovations in this picture, insofar as they could be applied to AI accelerators, are silicon photonics and the vertical stacking of logic. The former uses photons (light) rather than electrical signals to move data between components, which offers more bandwidth for less power than traditional electrical data transmission.

Judging by the diagram, it looks like this light-based transmission technology would be used horizontally to connect to other accelerators.

Here’s @NVIDIA’s vision of the future of AI compute.
Silicon photonics interposer
SiPh intrachip and interchip
12 SiPh connects, 3 per GPU tile
4 GPU tiles per tier
GPU ‘tiers’ (GPU on GPU?!?)
3D Stacked DRAM, 6 per tile, fine-grained
From #iedm24. My guess, 2028/2029/2030… pic.twitter.com/5IsDkYSWT2
December 8, 2024

However, TechPowerUp says these accelerators feature “12 SiPh [silicon photonics] connections for intrachip and interchip connections, with three connections per GPU tile across four GPU tiles per tier”. And “intra-chip connection” would seem to imply connection between each of these tiles within each tier, too.
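The numbers in that description are easy to sanity-check. Here's a minimal Python sketch of the per-tier tally, using only the figures quoted from the post (three SiPh connections per tile, four tiles per tier, six DRAM units per tile). The helper name `per_tier_totals` is made up for illustration, and treating "6 per tile" as a count of DRAM units is my assumption. Nothing here says how many tiers Nvidia intends to stack, so it only counts a single tier.

```python
# Figures as quoted from the IEDM 2024 slide in the post.
SIPH_CONNECTIONS_PER_TILE = 3
GPU_TILES_PER_TIER = 4
DRAM_UNITS_PER_TILE = 6  # assumption: "6 per tile" counts DRAM units


def per_tier_totals(tiles_per_tier=GPU_TILES_PER_TIER):
    """Hypothetical helper: tally the quoted figures for one GPU tier."""
    return {
        "gpu_tiles": tiles_per_tier,
        "siph_connections": tiles_per_tier * SIPH_CONNECTIONS_PER_TILE,
        "dram_units": tiles_per_tier * DRAM_UNITS_PER_TILE,
    }


totals = per_tier_totals()
print(totals)
# → {'gpu_tiles': 4, 'siph_connections': 12, 'dram_units': 24}
```

So the 12 SiPh connections line up with exactly one tier's worth of tiles (3 × 4), which fits the idea that the photonics sits at the base of the stack rather than being repeated on every tier, though the slide doesn't spell that out.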

The diagram says there’s an electrical (not optical) interconnect from die-to-die and tier-to-tier, which would suggest it’s using more traditional Through-Silicon Via (TSV) tech to get the vertical stacking done.

Silicon photonics is still in its infancy, so it makes sense for Nvidia to use TSV technology for the vertical dimension. This essentially involves creating tiny tunnels as pathways between the stacked chips, and it’s the technology that allows the AMD Ryzen 7 9800X3D, for example, to have its processor cores sitting on top of its cache.

Usually, though, we see 3D-stacked chips limited to pairing cache with logic, i.e. L3 cache stacked with CPU cores as per AMD’s chip, rather than logic on logic, which is what’s suggested here.

It looks like four GPU tiles will exist per GPU “tier”, and these tiers will be stacked vertically, too. Then on top of all of that, stacked DRAM. That all sounds like it’d get extremely toasty, and it’s not something I’d expect to be achievable in the immediate future.

Whatever the case, it’s certainly an interesting picture of what might be to come, and if anyone’s able to do it, it’ll be Nvidia. And while we shouldn’t infer too much about these technologies making their way across to gaming GPUs any time soon, it wouldn’t be unreasonable to assume some of it might, at some point.

If the technology’s there and gets implemented in AI accelerators, the cheaper aspects (such as TSV stacking) might be worth adding to the consumer GPU mix. Gaming graphics doesn’t require the kind of bandwidth that AI processing does, though, so I think we can remove photonics from the gaming equation for the foreseeable future.

And these combined technologies won’t even be possible for AI accelerators in the near future, either. I think Cutress is right: “My guess, 2028/2029/2030 minimum.”
