By the time games use this tech, you'll have a different graphics card.

We can surely all agree it’s a great pity that some otherwise decent modern GPUs are hobbled by a shortage of VRAM. I’m looking at you, Nvidia RTX 5060. So, what if there were a way to squeeze games into a much smaller memory footprint without impacting image quality?

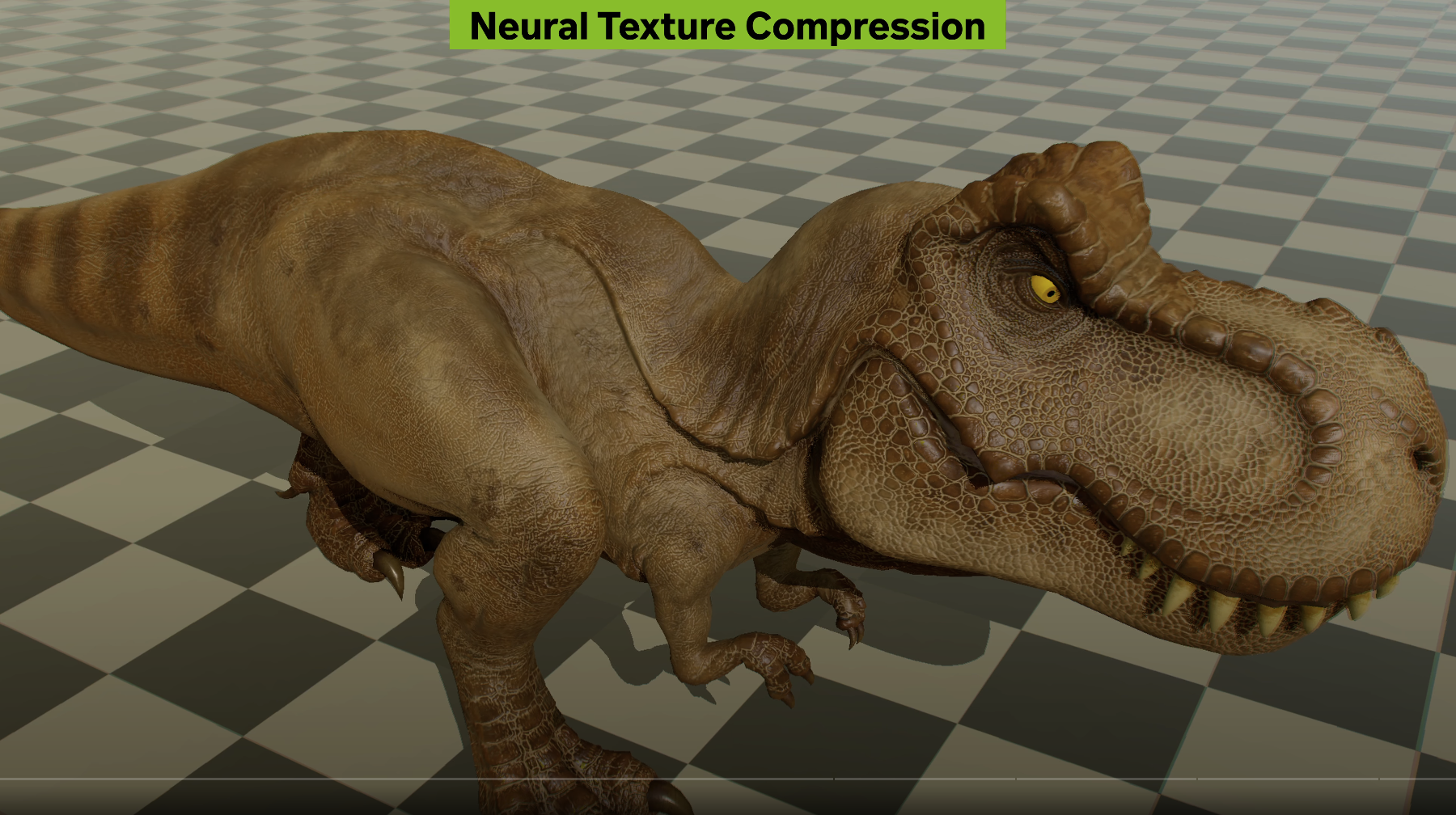

Actually, there is, at least in part, and it’s called neural texture compression (NTC). Indeed, the basic idea is nothing new, as we’ve been posting about NTC since the dark days of 2023.

Not to overly flex, but our own Nick also got there ages ago with the Nvidia demo, which he experimented with back in February. However, as ever with a novel 3D rendering technology, the caveat was a certain green-tinged proprietary aspect. Nvidia’s take on NTC is known as RTXNTC, and while it doesn’t require an Nvidia GPU, it really only runs well on RTX 40 and 50 series GPUs.

More recently, however, Intel has gotten in on the game with a more GPU-agnostic approach targeted at low-power GPUs, one that could open the technology up to a broader range of graphics cards. Now, both technologies have been combined in a video demo by Compusemble that shows off the benefits of each. And it’s pretty impressive stuff.

Roughly speaking, in both cases, the principles are the same, namely using AI to compress video game textures far more efficiently than conventional compression techniques. Actually, what NTC does is transform textures into a set of weights from which texture information can then be generated. But the basic idea is to maintain image quality while reducing memory size.
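To make "a set of weights from which texture information can be generated" a bit more concrete, here's a toy Python sketch. It is not Nvidia's RTXNTC or Intel's implementation. It assumes a generic layout: a low-resolution grid of learned latent features plus a tiny neural network (an MLP) that turns those features into several material channels for a single texel. The weights are random rather than trained, so the decoded values are meaningless; the point is the data flow and how few numbers get stored compared with the raw texture. All names and sizes here (`TEX_SIZE`, `GRID`, `decode_texel` and so on) are hypothetical.

```python
import math
import random

# Toy illustration only: untrained random weights, NOT RTXNTC or Intel's code.
TEX_SIZE = 64   # hypothetical 64x64 material
CHANNELS = 8    # hypothetical channels, e.g. albedo RGB, normal XY, roughness, metalness, occlusion
GRID = 16       # latent grid resolution (16x16)
LATENT = 4      # latent features stored per grid cell
HIDDEN = 8      # hidden units in the tiny decoder MLP

rng = random.Random(0)
latents = [[[rng.uniform(-1, 1) for _ in range(LATENT)] for _ in range(GRID)] for _ in range(GRID)]
w1 = [[rng.uniform(-1, 1) for _ in range(LATENT)] for _ in range(HIDDEN)]
b1 = [0.0] * HIDDEN
w2 = [[rng.uniform(-1, 1) for _ in range(HIDDEN)] for _ in range(CHANNELS)]
b2 = [0.0] * CHANNELS


def sample_latent(u: float, v: float) -> list[float]:
    """Bilinearly interpolate the latent grid at UV coordinates in [0, 1]."""
    x, y = u * (GRID - 1), v * (GRID - 1)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, GRID - 1), min(y0 + 1, GRID - 1)
    fx, fy = x - x0, y - y0
    out = []
    for k in range(LATENT):
        top = latents[y0][x0][k] * (1 - fx) + latents[y0][x1][k] * fx
        bottom = latents[y1][x0][k] * (1 - fx) + latents[y1][x1][k] * fx
        out.append(top * (1 - fy) + bottom * fy)
    return out


def decode_texel(u: float, v: float) -> list[float]:
    """Latent features -> ReLU hidden layer -> CHANNELS sigmoid outputs in (0, 1)."""
    z = sample_latent(u, v)
    h = [max(0.0, sum(w * zi for w, zi in zip(row, z)) + b) for row, b in zip(w1, b1)]
    return [1 / (1 + math.exp(-(sum(w * hi for w, hi in zip(row, h)) + b)))
            for row, b in zip(w2, b2)]


def stored_values() -> tuple[int, int]:
    """Count numbers stored as a raw texture vs. as latents plus decoder weights."""
    raw = TEX_SIZE * TEX_SIZE * CHANNELS
    neural = GRID * GRID * LATENT + HIDDEN * LATENT + HIDDEN + CHANNELS * HIDDEN + CHANNELS
    return raw, neural


if __name__ == "__main__":
    print(len(decode_texel(0.3, 0.7)), "channels decoded for one texel")
    raw, neural = stored_values()
    print(f"raw values: {raw}, neural values: {neural}")
```

The count ignores bit depth and the block compression games already use, so the ratio it prints says nothing about real-world savings. It only illustrates why storing "weights" instead of texels can be so much smaller.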

In the simplest terms, doing that gives you two options. Either you can dramatically improve image quality in a similar memory footprint. Or you can dramatically reduce the memory footprint of textures with minimal image quality loss.

The latter is particularly interesting for recent generations of relatively affordable graphics cards, many of which top out at 8 GB of VRAM, including the aforementioned RTX 5060 and its RTX 4060 predecessor.

In modern games, there are frequent occasions when those GPUs have enough raw horsepower to produce decent frame rates but not enough VRAM to hold all the 3D assets. That in turn forces the GPU to pull data in from main system memory, and performance craters.

When Nick tested out the Nvidia demo, he found that the textures shrank in size by 96% at the cost of a 15% frame-rate hit. The caveat is that he was using an RTX 4080 Super. Lower-performance GPUs lack the Tensor core throughput to keep the frame-rate reduction that small, so they need a different technique to maintain performance, one that shrinks the memory footprint by only 64%. Well, I say only. That’s still a very large reduction.
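To put those percentages in VRAM terms, here's a back-of-the-envelope Python sketch. The game figures are entirely made up for illustration: a hypothetical title with 5 GB of other data plus 4 GB of textures, running on an 8 GB card. Only the texture share is shrunk, and the frame-rate cost isn't modelled at all. The 96% and 64% figures are the ones from Nick's testing; everything else, including the function names, is hypothetical.

```python
# Hypothetical figures for illustration only; not measurements from any game or demo.
VRAM_GB = 8.0  # e.g. an 8 GB card such as the RTX 5060 or RTX 4060


def remaining_gb(size_gb: float, reduction_pct: float) -> float:
    """Return the size left after shrinking size_gb by reduction_pct percent."""
    return size_gb * (1.0 - reduction_pct / 100.0)


def total_footprint_gb(other_gb: float, texture_gb: float, reduction_pct: float) -> float:
    """Non-texture data is left untouched; only the texture share is compressed."""
    return other_gb + remaining_gb(texture_gb, reduction_pct)


if __name__ == "__main__":
    other, textures = 5.0, 4.0  # hypothetical: 9 GB in total, over an 8 GB budget
    for label, pct in [("uncompressed", 0.0), ("64% reduction", 64.0), ("96% reduction", 96.0)]:
        total = total_footprint_gb(other, textures, pct)
        print(f"{label:>14}: {total:.2f} GB, fits in {VRAM_GB:.0f} GB: {total <= VRAM_GB}")
```

In this made-up case, even the smaller 64% saving is enough to pull a 9 GB workload back under the 8 GB line, which is the whole appeal for mainstream cards.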

Neural texture compression won’t shrink all game data. Nvidia says it works best “for materials with multiple channels.” That means not plain RGB textures, but materials carrying information about how a surface absorbs, reflects, emits, and refracts light, in the form of normal maps, opacity, and occlusion. But that’s actually a hefty chunk of the data in modern games, and the obvious hope is that reducing that footprint could make the difference between a game that fits in VRAM and one that doesn’t.

Anyway, the YouTube video is a neat little demonstration of the potential benefits of NTC. The snag is that it’s not a new idea, and here we are, more than two years on from our first post on the subject, with only a couple of tech demos to show for it.

Supporting NTC will require game devs to add it to their arsenal. It isn’t something that could be added to, say, the graphics driver and then applied to legacy titles. So, even if game developers do begin to use it for new titles, the question is whether they’d bother to put in the effort to update existing games.

There’s also a chance that the gaming community might not take to NTC well, especially if it’s used in a way that impacts image quality. Given that it’s ultimately an AI tech that generates textures, it’s open to accusations of “fakeness”. In other words, here are some fake textures to go along with your fake frames.

In the end, it will all come down to image quality and timing. The demos in the YouTube video show how it can produce impressive results. The question is how long it will take game developers to adopt it and what graphics card you’ll have if and when they do.
