Oh, and it's all Apple's fault. Well, possibly...

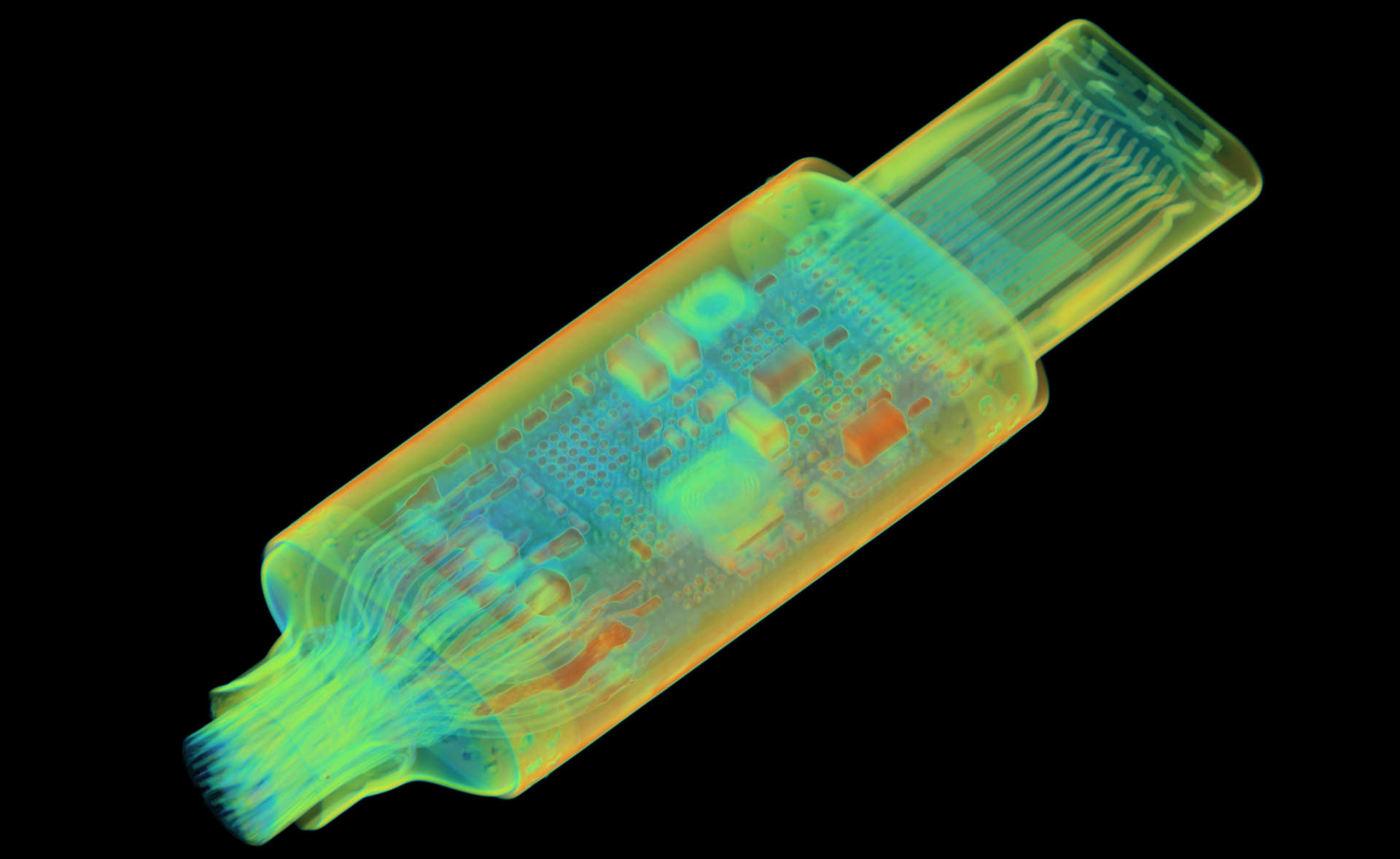

Question: what’s the difference between USB 3.1, USB 3.1 Gen 2, and USB 3.2 Gen 2? Answer: they’re all exactly the same. They’re just different iterations of the totally bananas naming schemes that have been cooked up for USB over recent years. But if you thought USB branding was a mess, well, you really don’t want to know about the catastrophic mess that is the underlying hardware.

And guess what, it’s all Apple’s fault. Well, it’s partially Apple’s fault, according to an anonymous USB engineer who, posing as a fox, gave a remarkably entertaining talk on the history of USB and the USB-C standard. Yes, really.

To be more specific, the talk was hosted on the DegenTech YouTube channel, self-described as, “a network of working professional furries interested in STEMM. We host open meets and presentations on VRchat and hangout on discord.” It was given by a DegenTech member going by the name of Temporel, who has a history of USB engineering for various large outfits including Siemens.

According to Temporel, the answer to the question of how USB-C got so complicated and over-engineered is Apple. Kind of.

“If someone wants to hate on Apple, this is the time because partially this is Apple’s fault,” Temporel says. To cut a long and surprisingly fascinating story short, the problem comes down to charging.

The original USB-A standard was never intended to support device charging. It was about hooking up devices like keyboards and mice with very low power requirements, along with reliable data transfer.

And it was very good at that, enabling 480 Mb/s of data over just two wires. Indeed, as Temporel says, USB Type-A did a stellar job of killing off legacy interfaces like FireWire and parallel. “It was cheaper, more convenient. It was very scalable,” Temporel says.

But it didn’t support much by way of power output, just 0.5 A at 5 V. So, the problems all started in 2007 when Apple launched the iPhone and wanted to use USB to charge the device.

Apple cooked up its own out-of-spec USB interface with 2.4 A of charging current. Drawing that much from a computer’s USB port could damage it, so Apple devised a method where the iPhone would only charge at the higher rate when it detected a dedicated power supply as opposed to a standard USB port.
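
For a sense of scale, those current figures can be turned into watts using P = V × I. The following is a rough Python sketch, not anything from the talk. It assumes the 2.4 A charging ran at the same 5 V as standard USB, which the article does not state, and the function and variable names are made up for illustration.

```python
# Electrical power in watts: P = V * I.
def power_watts(volts: float, amps: float) -> float:
    return volts * amps

USB_VOLTS = 5.0  # standard USB bus voltage cited above

# Original USB limit: 0.5 A at 5 V.
standard_port = power_watts(USB_VOLTS, 0.5)

# Out-of-spec 2.4 A charging. ASSUMPTION: still 5 V (not stated in the source).
fast_charger = power_watts(USB_VOLTS, 2.4)

print(f"Standard USB port:   {standard_port:.1f} W")
print(f"Out-of-spec charger: {fast_charger:.1f} W")
print(f"Ratio:               {fast_charger / standard_port:.1f}x")
```

Under that assumption, the out-of-spec charger delivers nearly five times the power a standard port was designed to supply, which is why plugging such a device into an unprepared computer port was a concern.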

Pretty quickly, lots of other companies copied this approach, resulting in as many as 20 different standards based on USB but not actually compliant with the USB spec. In 2012, the USB standards body added support for 1.5 A, but this wasn’t enough.

The upshot was that all USB chargers and sockets ended up having integrated circuits and current sensing hardware that would figure out what the maximum charging capability was in any given device-and-charger scenario, but it was all outside the official USB spec.
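
The basic idea behind that sensing hardware can be sketched in a few lines of Python. This is purely illustrative: the `Source` enum, `charging_current` function, and the decision logic are hypothetical and do not reproduce Apple's or any other vendor's actual detection scheme, which the article does not describe. The only figures taken from the text are the 0.5 A spec limit and the 2.4 A fast-charge figure.

```python
from enum import Enum

class Source(Enum):
    STANDARD_PORT = "standard USB port"      # e.g. a socket on a computer
    DEDICATED_CHARGER = "dedicated charger"  # e.g. a wall power supply

SPEC_LIMIT_AMPS = 0.5  # original USB current limit cited in the article

def charging_current(source: Source,
                     charger_max_amps: float,
                     device_max_amps: float) -> float:
    """Pick a safe charging current (hypothetical logic, for illustration)."""
    if source is Source.STANDARD_PORT:
        # Stay within the spec limit on a port that could be damaged.
        return min(SPEC_LIMIT_AMPS, device_max_amps)
    # On a recognised charger, the weaker side sets the ceiling.
    return min(charger_max_amps, device_max_amps)

print(charging_current(Source.STANDARD_PORT, 2.4, 2.4))      # computer port
print(charging_current(Source.DEDICATED_CHARGER, 2.4, 2.4))  # matching charger
print(charging_current(Source.DEDICATED_CHARGER, 1.0, 2.4))  # weaker charger
```

The hard part in the real world is the step this sketch takes for granted: working out what kind of source is on the other end of the cable. That is where the many competing, out-of-spec schemes came in.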

China then implemented a standard that combined Apple’s charging standard with one from Huawei and made it compulsory. And since so many devices are made in China, that went a long way to creating a de facto standard for USB devices.

When USB Type-C came along, it was a chance to start anew. But the problem was then backwards compatibility. As Temporel explains, “all of the mayhem that is on the old spec is now added on top of the USB-C because you still have to support USB-A.

“You have standard USB. On top of that you have 15 independent standards from manufacturers. Then on top of that you have whatever six standards USB wanted to implement that didn’t help and just made it worse. That was slightly patch fixed by bloody China to force everyone to use at least two of them. And then on top of all this, they’re now going to start piling the USB-C protocols.”

Anyway, if you’ve ever wondered why something didn’t just work based on the assumption that USB is USB, now you know. USB is rarely, if ever, just USB. You can find out more from the full video, which is well worth a watch.
