AI for your job, your storage, your factories, and your little robot too.

Yesterday, Nvidia’s CEO Jensen Huang addressed the crowd at the company’s global artificial intelligence conference. Naturally, the keynote focused heavily on Nvidia’s future in AI, including the current Blackwell processing architecture and what comes after it. Huang even touched on things like the use of AI in robotics, but with Nvidia’s stock price continuing to drop, the market doesn’t seem too impressed.

For the first portion of the presentation, RTX Blackwell was hot on the CEO’s lips. We’ve just seen the launch of Nvidia’s new RTX 50-series cards running the new architecture, and DLSS 4 has been one of the few highlights. Between this and AMD’s FSR 4, AI upscaling is going to be key to improving games in the coming generations. Huang also made a point of noting that the server-side version of Blackwell delivers a claimed 40x increase in AI ‘factory’ performance over Nvidia’s own Hopper.

While the company didn’t announce any gaming PCs, we did see two brand new Nvidia desktops shown off. DGX Spark (formerly DIGITS) and DGX Station are desktop computers designed specifically to run large AI models. Like the also enterprise-focussed RTX Blackwell Pros that were announced, they’re not likely to be a choice pick for your next rig, but you can register interest for the golden AI bois.

Huang also covered Nvidia’s new roadmap detailing its near-future AI work. As this is a developer-aimed conference, the roadmap is more about helping teams plan their work with Nvidia and be ready to use the company’s technologies. That being said, Nvidia is touting Rubin and Rubin Ultra as the next big leap, with extreme scale-up capabilities.

Rubin is Nvidia’s upcoming AI-ready architecture, and it’s not a bastardised spelling of the sandwich. Instead, the architecture is named after Vera Rubin, the astronomer whose measurements of galaxy rotation provided key evidence for dark matter. It is destined to introduce new designs for CPUs and GPUs, as well as memory systems.

“Rubin is 900x the performance of Hopper in scale-up FLOPS, setting the stage for the next era of AI,” says Huang, to a crowd who hopefully understood that.

It also comes with a turbo-boosted version, Rubin Ultra. This is for huge projects, and will support racks drawing up to 600 kilowatts, with over 2.5 million individual components per rack.

With both in place in the market, Nvidia hopes to be ready to face the greater demands AI will put on factories and processing, while still being scalable and energy efficient. According to the roadmap, we should be seeing Rubin in play in 2026, and after that we’ll have the Feynman architecture, named after physicist Richard Feynman, in 2027-28.

These architectures will likely work in tandem with Dynamo, another piece of Nvidia AI software announced during the keynote. Dynamo is the successor to Nvidia’s Triton Inference Server and, critically, is open source and available to all. The inference-serving software helps language models run across many GPUs by coordinating between them, and can split the prompt-processing and generation phases onto different GPUs. Nvidia says it has already doubled performance on Hopper hardware, but it also leads to a more interesting way to handle these tasks.

Dynamo keeps track of what each GPU has already processed, and routes new requests to GPUs that already hold information that might help. That makes those GPUs more efficient at these tasks. Honestly, this sounds a lot like how a real brain works: the more you think and associate topics, the stronger those links will be and the better you can process ideas. And Nvidia’s AI ambitions aren’t limited to GPUs and CPUs, as Huang expects AI to drastically change storage too.
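To make that routing idea a bit more concrete, here is a toy sketch in Python. It is not Dynamo’s actual API or algorithm. The `pick_worker` function, the GPU names, and the scoring rule (shared prompt prefix minus a load penalty) are all hypothetical, made up purely for illustration.

```python
def shared_prefix_len(a, b):
    """Count how many leading tokens two token lists have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def pick_worker(request_tokens, worker_caches, worker_load, load_penalty=1.0):
    """Hypothetical router: prefer the GPU whose cache overlaps the request most,
    minus a penalty for how busy that GPU already is."""
    best_worker, best_score = None, float("-inf")
    for worker, cached_prompts in worker_caches.items():
        overlap = max(
            (shared_prefix_len(request_tokens, p) for p in cached_prompts),
            default=0,
        )
        score = overlap - load_penalty * worker_load.get(worker, 0)
        if score > best_score:
            best_worker, best_score = worker, score
    return best_worker


# Made-up example state: what each GPU has seen before, and its current load.
caches = {
    "gpu0": [["you", "are", "a", "helpful", "assistant"]],
    "gpu1": [["translate", "this", "text"]],
}
load = {"gpu0": 1, "gpu1": 0}

request = ["you", "are", "a", "helpful", "pirate"]
chosen = pick_worker(request, caches, load)
print(chosen)
```

In this made-up setup the request goes to `gpu0`, because four tokens of already-processed prompt outweigh the fact that it is slightly busier than `gpu1`.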

“Rather than a retrieval-based storage system, the storage system of the future is going to be a semantics-based retrieval system. A storage system that continuously embeds raw data into knowledge in the background, and later, when you access it, you won’t retrieve it; you’ll just talk to it. You’ll ask it questions and give it problems,” he explains.
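For a rough feel of what “asking” storage a question could mean, here is a deliberately tiny Python sketch. It assumes a stand-in `embed` function that just counts words, where a real system would presumably use a learned embedding model. The file names and contents are invented for the example, and none of this reflects an actual Nvidia product.

```python
import math
from collections import Counter


def embed(text):
    """Stand-in embedding: a bag-of-words count (real systems use learned vectors)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


# Invented files; embedding them up front mimics the "in the background" step.
documents = {
    "report_q1.txt": "gpu sales grew in the data center segment",
    "notes.txt": "robot arm calibration steps for the lab",
}
index = {name: embed(text) for name, text in documents.items()}


def ask(question):
    """Return the stored file whose content is closest in meaning to the question."""
    q = embed(question)
    return max(index, key=lambda name: cosine(q, index[name]))


best = ask("how did data center gpu sales do")
print(best)
```

Here the question is matched to `report_q1.txt` without naming the file or its path, which is the basic shift Huang is describing.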

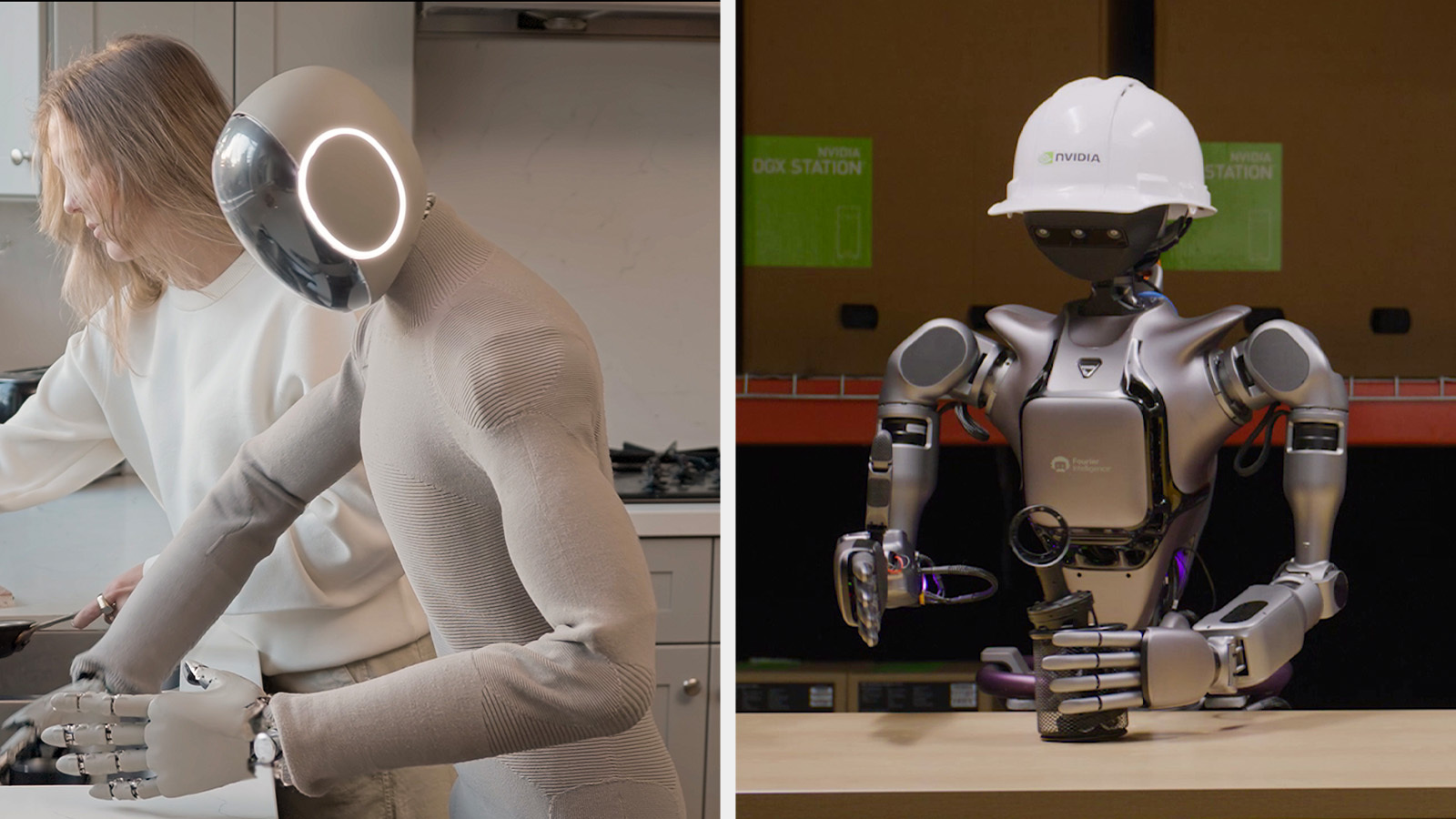

If you’re not still reeling at that, get ready for Nvidia’s incredibly named Isaac GR00T N1. Touted as the first open and customizable foundation model for generalized humanoid reasoning and skills, this will teach your robot exactly what to do when an apple falls on its head. Invent gravity.

“With NVIDIA Isaac GR00T N1 and new data-generation and robot-learning frameworks, robotics developers everywhere will open the next frontier in the age of AI,” says Huang.

It works by splitting tasks into two different categories: one for immediate, fast reactions, and the other for more thoughtful reasoning. These can be combined to do things like look around a room and immediately analyse it, and then perform actions that the specific robot is capable of. This is just the first in a series of models that Nvidia is planning to pretrain and release for download.
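That fast-and-slow split can be pictured with a small Python toy. To be clear, this is not how GR00T N1 is implemented. The `slow_planner` and `fast_controller` functions, the observation fields, and the every-third-tick schedule are all hypothetical, chosen only to show a slow reasoning step steering a fast reactive one.

```python
def slow_planner(observation):
    """Hypothetical 'thoughtful' system: looks at the scene and picks a goal."""
    if "cup" in observation["objects"]:
        return "grasp cup"
    return "explore"


def fast_controller(plan, observation):
    """Hypothetical 'reflex' system: runs every tick and reacts immediately."""
    if observation["obstacle_close"]:
        return "stop"
    return "reach" if plan == "grasp cup" else "walk"


def run(observations, plan_every=3):
    """Re-plan only every few ticks, but act on every tick."""
    plan, actions = None, []
    for tick, obs in enumerate(observations):
        if tick % plan_every == 0:
            plan = slow_planner(obs)
        actions.append(fast_controller(plan, obs))
    return actions


# Made-up sensor frames for four consecutive ticks.
frames = [
    {"objects": [], "obstacle_close": False},
    {"objects": ["cup"], "obstacle_close": False},
    {"objects": ["cup"], "obstacle_close": True},
    {"objects": ["cup"], "obstacle_close": False},
]
actions = run(frames)
print(actions)
```

In this toy the robot keeps walking until the planner next runs, stops on its own the moment an obstacle appears, and only reaches for the cup once the slower system has caught up with the scene.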

These keynotes are always squarely aimed at developers and enterprise users rather than the average gamer, but they also point to future technologies that could wind up anywhere, including gaming. For Nvidia, it looks like we can expect the company to go all in on further AI development, and most of it looks like it’s being put to good use. Less AI for art purposes, more for improving graphics, efficient storage, complex programming, and, of course, teaching robots how to grab stuff.