The trick, it turns out, is to use an awful lot of clever and complicated math.

Spell checking is such a ubiquitous feature of today’s software that we expect to see it in browsers and basic text editors, and on just about every computing device. However, 50 years ago, it was a significant challenge to implement due to the paucity of memory that affected even the supercomputers of the era. The solution was so effective that the core technology developed—a lossless compression algorithm—is still in use today.

Okay, so a five-decade computing story is hardly ‘news’ but thanks to a timely reminder of the work at Hackaday, we felt that it was worth sharing and for that, we must turn to Abhinav Upadhyay’s blog on the subject.

It all began in 1975 when programmers at AT&T were looking to implement Unix as a text processor for the company’s patents division. Operating systems aren’t the normal choice for a word processor but hey, things were kinda different back then. One barrier to using Unix in such a role was that it would need to have a fully functional spell-checking system.

The simplest way of doing this is dumping an entire dictionary into system memory and then just having the computer look up the words. However, not only would this be very slow to do, but computers of that time simply didn’t have enough memory to store a dictionary as is. As Upadhyay starts in his blog: how does one fit a 250 kb file into just 64 kB of RAM?

You compress it, of course, but that’s not as easy as it may seem. For example, I can make a 256 kb text file, containing over 260,000 identical characters, and use a file compression tool and the Lempel–Ziv–Markov chain algorithm (LMZA) to squash it down to just 257 bytes, but that’s on a modern computer, with an ultra-fast, multicore processor and huge amounts of speedy RAM—and it’s a useless dictionary.

AT&T were using PDP-11 machines which are light years away in terms of processing power and memory. Searching through such a compressed file on a machine of that age would be unusable in real terms and my squashed-up text file is good for one word only.

The first prototype of a spell checker for Unix took computer scientist Steve Johnson the better part of an afternoon to create and while it worked, it was slow (because it was disk-based) and prone to mistakes. Douglas McIlroy, Unix pioneer at Bell Laboratories, then took up the gauntlet and tackled the problem on two fronts: an algorithm for reducing the size of words in the dictionary and a data structure for the dictionary so that it could fit into a few kB of system memory.

Updadhyay goes through all the mathematics behind both mechanisms and it’s fascinating reading, although it does require a thorough grounding in math and computer science to stand any chance of understanding.

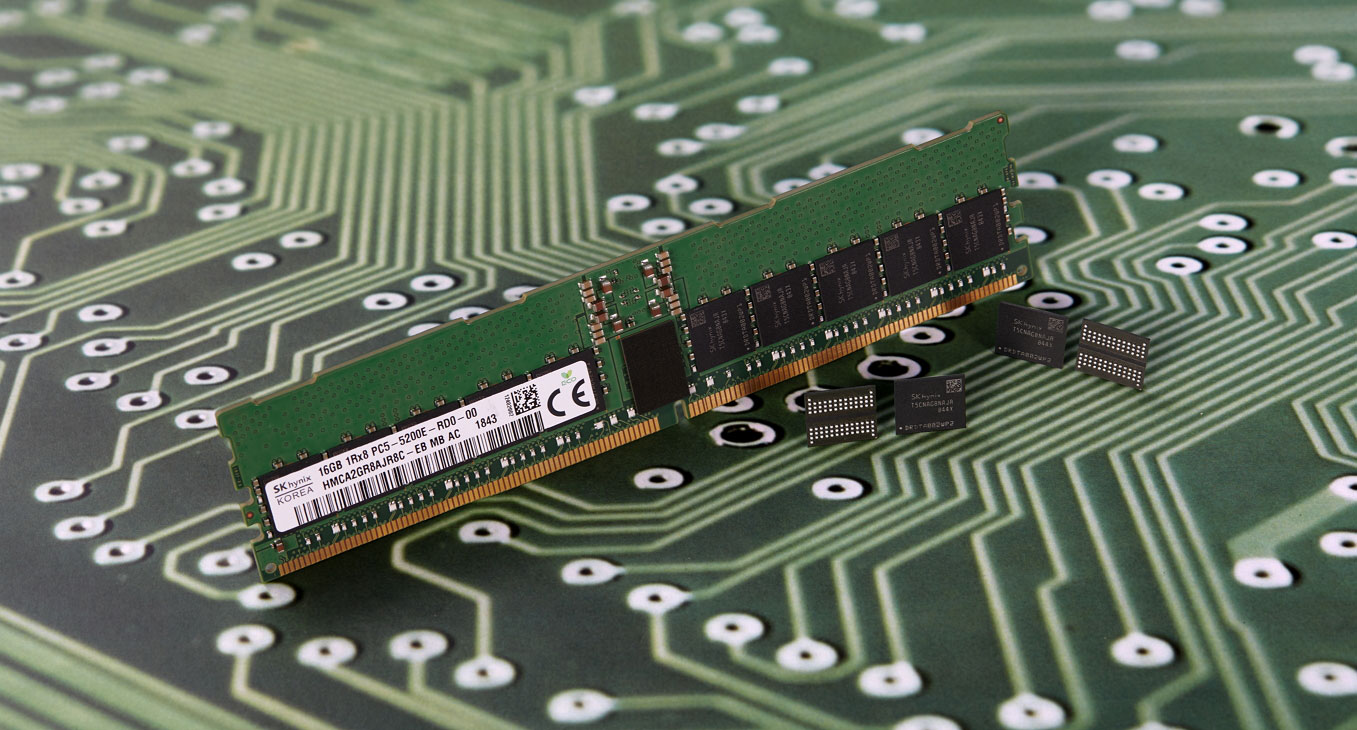

Best CPU for gaming: The top chips from Intel and AMD.

Best gaming motherboard: The right boards.

Best graphics card: Your perfect pixel-pusher awaits.

Best SSD for gaming: Get into the game ahead of the rest.

What matters is the end result. McIlroy’s work ultimately resulted in an algorithm that required an astonishing 14 bits of memory for each word; a dictionary with 30,000 entries would take up a fraction under 52 kB. The theoretical minimum size with the system is 13.57 bits (the higher memory usage was to make it faster to look up words) so it’s fair to say that McIlroy did an incredible job. That level of usable compression remains unmatched to this day, in terms of how close it gets to its theoretical limit.

Part of his solution involved the use of Golomb coding, a form of lossless compression that’s still in use today, in the form of Rice coding, as used in FLAC, Apple Lossless, and Lossless JPEG. Modern spell checkers work very differently, of course, and they’re certainly not under the same processing and RAM constraints that Johnson and McIlory were—Notepad, for example, requires 32 MB of memory just for itself and that kind of RAM could only be dreamed about in the 1970s.

It’s proof, if any was ever needed, that the most creative programming solutions are born under the duress of hardware constraints. Today’s computers are so capable, with such vast amounts of resources to hand, that many solutions are of the form ‘it just works’—while I’m not suggesting we should go back to a world of kHz clock speeds and pitiful amounts of memory, I do wish software companies would take a leaf from early programmers on how to maximise what you’ve got.