And it's not just because the technology is brand new.

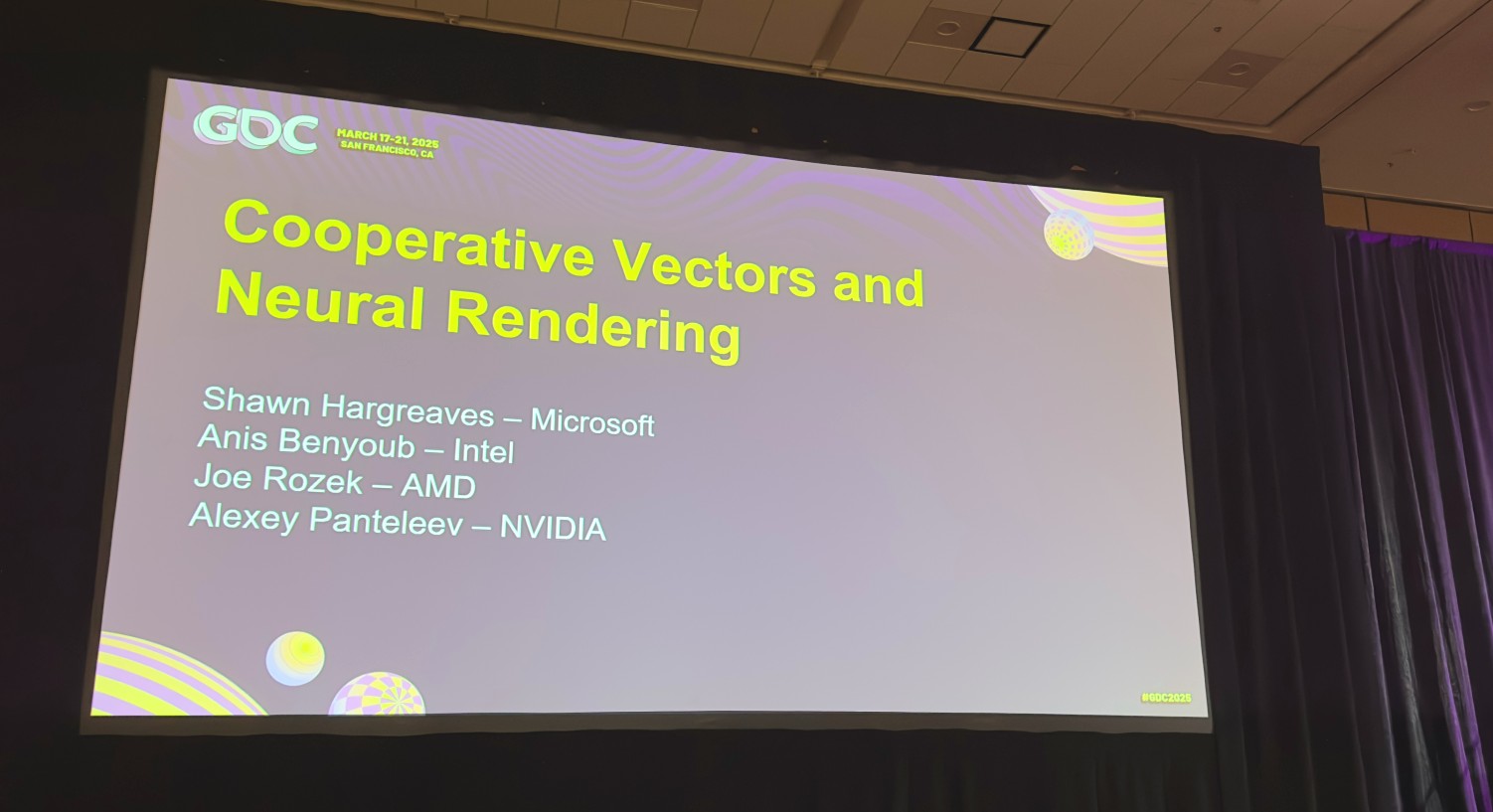

At this year’s Game Developers Conference in San Francisco, Microsoft and the three biggest GPU manufacturers kicked off a week of lectures on advanced graphics techniques with an introduction to the next ‘big thing’: cooperative vectors. But for all the promise the new feature offers, it’ll be a good while before we see it making a big difference in the games we’re playing. Not least because one GPU vendor has only just joined the matrix core gang.

If you’re wondering just what exactly cooperative vectors are, then you’re in good company, because despite announcing them earlier in the year, Microsoft has done a pretty rotten job at describing just what they are, how they work, and what you can really do with them. Fortunately, Microsoft’s head of DirectX development, Shawn Hargreaves, offered a nice overview of the forthcoming feature to its graphics API. He’s a Windows guy, but it’s also worth noting that it will be coming to Vulkan, too.

Cooperative vectors is the name for an addition to the DirectX 12 family of APIs that will allow programmers to directly use a GPU’s matrix or tensor cores, without having to go through a vendor-specific API. For example, in the case of Nvidia’s GeForce RTX cards, the Tensor cores automatically handle any FP16 calculations but otherwise, you need to use the likes of CUDA to program them. The DX12 CoopVec API gets around this issue, as it will be supported by AMD, Intel, Nvidia, and even Qualcomm.

You could argue that CoopVec is really just a bunch of extra HLSL (High Level Shader Language) instructions that are specific to matrix-vector operations, which tensor/matrix cores are designed to accelerate. But a better way to look at the API is that it is all about bringing the world of ‘little AI’ into shaders.

Along with Microsoft, representatives from AMD, Intel, and Nvidia all talked about what they were developing with CoopVec. In Nvidia’s case, we’ve already heard about its projects: RTX texture compression, RTX neural materials, and RTX neural radiance cache. Hargreaves described current developments as the ‘low hanging fruit’ of what can be achieved with CoopVec, but added that it’s still very early days for the new API. The general idea, though, is to use a small neural network, stored on the GPU, to approximate something that would otherwise be very expensive to do, shader-wise or data-wise.
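To make that general idea a little more concrete, here is a minimal sketch of what ‘a small neural network’ boils down to: a chain of matrix-vector multiplies with a simple activation in between, which is the kind of work matrix cores are built to accelerate. This is plain Python rather than HLSL, it is not the CoopVec API, and the function names, layer sizes, and weights are all made up for illustration.

```python
# Illustrative only: a tiny two-layer network evaluated for one input,
# the kind of per-pixel work a shader might hand to matrix cores.
# All names and weights here are hypothetical, not part of CoopVec.

def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def relu(vector):
    """Clamp negative values to zero."""
    return [max(0.0, v) for v in vector]


def tiny_mlp(inputs, layers):
    """Run inputs through (weights, biases) pairs, with ReLU between layers."""
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        product = matvec(weights, activations)
        activations = [p + b for p, b in zip(product, biases)]
        if index < len(layers) - 1:  # no activation after the final layer
            activations = relu(activations)
    return activations


# Made-up weights: 2 inputs -> 2 hidden values -> 1 output.
LAYERS = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 2.0]], [0.1]),
]

result = tiny_mlp([0.75, 0.25], LAYERS)
print(result)
```

A real use would have far more weights, trained offline so that the network’s output stands in for an expensive shader calculation or a large chunk of texture data.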

Like Nvidia, Intel has been working on its own neural texture compression algorithm, though it’s technically based on something that Ubisoft developed. Intel’s system is a little different to Nvidia’s but they’re both aimed at the same end result: reducing the memory footprint and bandwidth requirements for highly detailed materials. A metal surface that’s scratched, eroded, and marked with dirt will be rendered using multiple high-resolution textures, and the standard method of compressing these isn’t perfect, in terms of either visual quality or compression ratio.
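For a rough sense of why layered materials are such a memory hog, here is a back-of-the-envelope sketch. The five-layer material and the 4096 x 4096 resolution are assumptions picked for illustration, mipmaps are ignored, and BC7 (a standard block format that stores each 4 x 4 block of texels in 16 bytes, or one byte per texel) stands in for ‘the standard method’. It doesn’t model neural compression at all, as no figures for that are quoted here.

```python
# Hypothetical material: 5 layers (e.g. colour, normals, roughness, ...),
# each a 4096 x 4096 texture. Mipmaps are ignored for simplicity.
LAYER_COUNT = 5
SIZE = 4096
MIB = 1024 * 1024


def texture_bytes(width, height, bytes_per_texel):
    """Size in bytes of a single texture without mipmaps."""
    return width * height * bytes_per_texel


# Uncompressed RGBA8 uses 4 bytes per texel.
uncompressed = LAYER_COUNT * texture_bytes(SIZE, SIZE, 4)
# BC7 stores 16 bytes per 4x4 block, which works out to 1 byte per texel.
block_compressed = LAYER_COUNT * texture_bytes(SIZE, SIZE, 1)

print(uncompressed // MIB, "MiB uncompressed")          # 320 MiB
print(block_compressed // MIB, "MiB block compressed")  # 80 MiB
```

Even with a fixed 4:1 saving, one such material still takes up 80 MiB, which is the footprint neural compression is trying to shrink further.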

Neural compression works best on materials with many layers to them and both Intel and Nvidia ran a couple of demos showcasing significant reductions in VRAM load. However, there is a small performance penalty to using the technique and it’s not really usable on the majority of texture loads. So if you were hoping that neural texture compression would remove the need for many GBs of VRAM, then you’re out of luck.

That was the only thing Intel showed off in the talk and AMD didn’t have much to say either. Its speaker, Joe Rozek, went through something entirely different: the neural lighting system used for its recent Toy Shop demo (yes, the one which left our Andy a bit underwhelmed). Rozek somewhat quietly pointed out that CoopVec is currently only supported on its latest RDNA 4-powered Radeon RX 9070 graphics cards, though AMD is looking to see if older Radeons can support it too.

There was no sign of Qualcomm at GDC 2025 but Microsoft said that it was working on supporting the API for its Snapdragon X chips.

But while I genuinely think that CoopVec is great, it’s also long overdue and, in part, that’s AMD’s fault for taking so long to add discrete matrix units to its consumer GPUs. I’m also disappointed by how little AMD and Intel had to show at GDC, compared to Nvidia.

Given that Intel’s Arc GPUs have always sported matrix cores, you’d think it would have a bit more going on behind the scenes. Perhaps it does, and we may see something more comprehensive in the near future. At least AMD does have a full demo of AI-boosted rendering.

For the moment, it’s Nvidia that is, once again, deciding what the future of real-time rendering is going to look like. Developers will be able to get their hands on a preview version of Microsoft’s CoopVec API fairly soon but the full retail release isn’t planned until the end of the year, at the earliest. Let’s hope the uptake of CoopVec in games is faster than it was for DirectStorage, yes?