Whether this is semantics or something more meaningful remains to be seen.

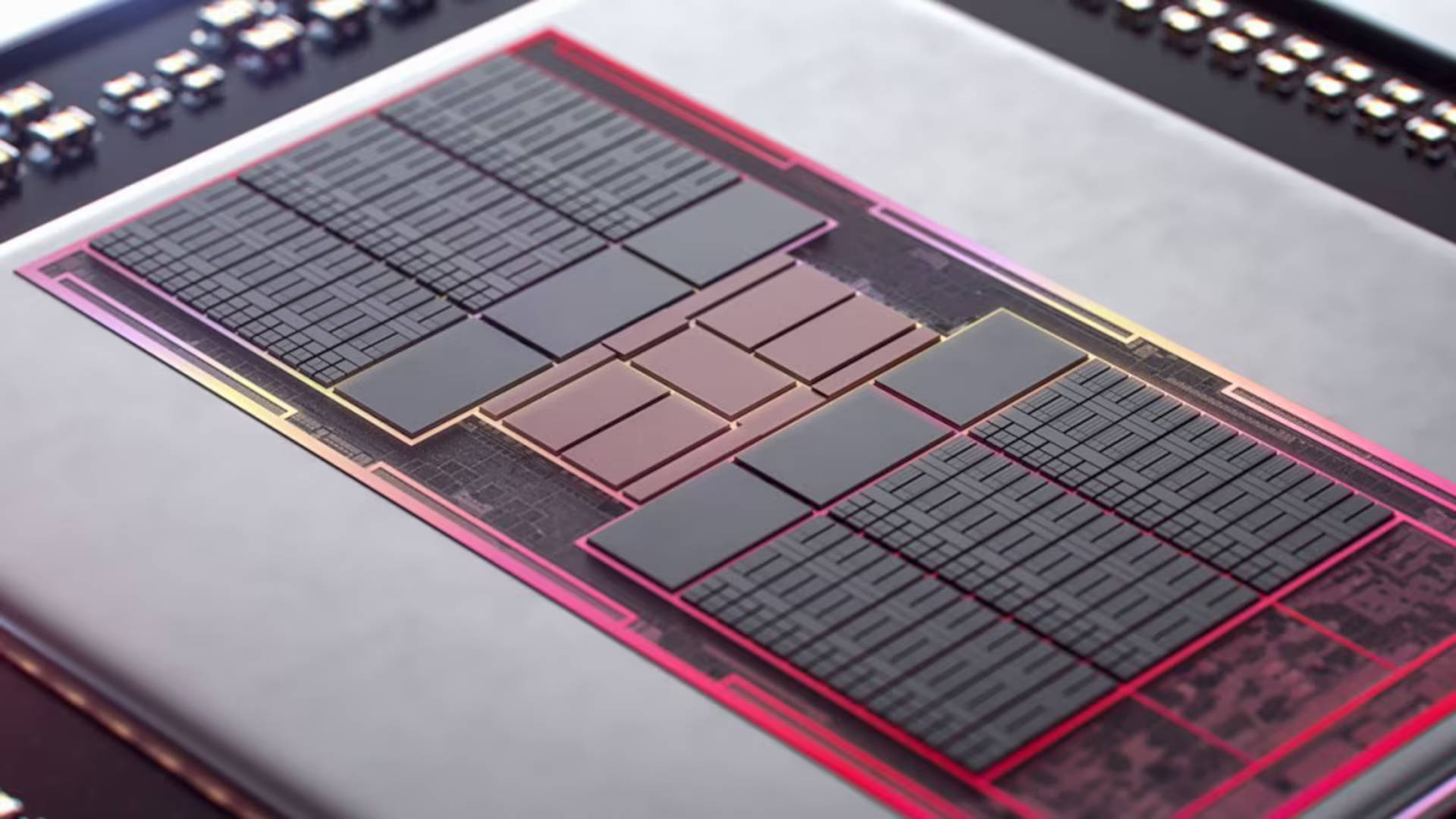

AMD hasn’t even got its next-gen RDNA 4 gaming GPUs out the door, but already there are rumours RDNA 5 has been cancelled in favour of the “unified” UDNA graphics architecture. If true, the move could be all about adding AI capabilities to AMD’s gaming GPUs as soon as possible.

AMD’s current gaming graphics cards are based on the RDNA 3 architecture, while its enterprise, data center and AI GPUs are based on the CDNA architecture. The idea is that the demands of gaming and data centers are sufficiently divergent to merit separate architectures.

At least, that was the narrative until September, when AMD announced plans to unify RDNA and CDNA into a single “UDNA” architecture. At the time, AMD said the new approach would be much easier for developers, though that implies that there are software developers creating games and, say, large language models at the same time, which seems unlikely.

Whatever the merits of the new approach, expectations in September were that UDNA would probably take a little while to hit gaming PCs. RDNA 4 is coming early in the new year and much had already been rumoured about its supposed but as yet unofficial successor, RDNA 5.

So, the assumption was that gaming graphics would make the jump to UDNA after RDNA 5. But no, not according to the latest rumour on Chiphell (via PC Guide). Established leaker zhangzhonghao claims that RDNA 5 is toast and that AMD is readying a UDNA-derived family of GPUs to succeed RDNA 4.

What’s more, the poster says that UDNA will actually be GCN-derived. Say what? GCN is the AMD graphics architecture that preceded RDNA and first appeared in 2012 in the Radeon HD 7000 family of GPUs. But GCN did not, in fact, die with the release of RDNA in 2019. Instead, it forked off into CDNA. So, if you can forgive all the acronyms, the idea is that UDNA is a development of CDNA, which in turn has its origins in GCN.

Now, it’s worth pointing out that the notion of “unified” graphics architectures isn’t quite what it first seems. It doesn’t mean that AMD will be selling the same chips to gamers as AI developers.

There’s lots of stuff related to the rendering pipeline and video output in a gaming GPU that doesn’t go into an AI GPU, including raster and geometry hardware, render output, 2D engines, hardware video encode and decode, and so on.

What we’re really talking about is a shared architecture for the shader ALUs and the AI-accelerating matrix cores, which Nvidia calls Tensor cores and which are akin to the cores found in various NPUs, or neural processing units. That’s a big chunk of even a gaming GPU these days, what with the shaders being so important, of course. But it’s by no means the whole chip.

Anyway, while a move to a “new” architecture that’s based on the “old” GCN technology superficially seems like a retrograde step, once again it’s hard to draw too many conclusions. The relationship to GCN may be more of a high-level philosophical approach than carrying over the nuts and bolts of the GCN architecture circa 2012.

Moreover, at this stage it’s really impossible to say for sure what the pros and cons of AMD’s new unified approach will be for gamers. However, perhaps the most obvious upside will be added AI smarts.

Right now, AMD doesn’t use any AI acceleration in its graphics cards to process or enhance its FSR upscaling tech. That’s quite a contrast with Nvidia, which leans into AI heavily with DLSS and bigs up the contribution of the Tensor cores built into the last three generations of Nvidia gaming GPUs.

Indeed, current RDNA 3 GPUs do not have matrix cores at all. But CDNA GPUs do, and UDNA GPUs surely will, too. In other words, UDNA gaming GPUs will be entering the AI age at last. There have been big hints that AMD’s FSR is going to start matching up with Nvidia when it comes to using dedicated silicon for its upscaling and frame generation features, which means future GPUs will need to feature tech the current ones really don’t. And, when you think of it in those terms, maybe AMD needed to move to UDNA ASAP, after all.
