Adios Apple and Microsoft, you're old news.

Remember when Nvidia just made gaming graphics cards? It’s hard to imagine many at the company do, as Nvidia yesterday achieved the remarkable status of “world’s most valuable company”, overtaking Apple and Microsoft.

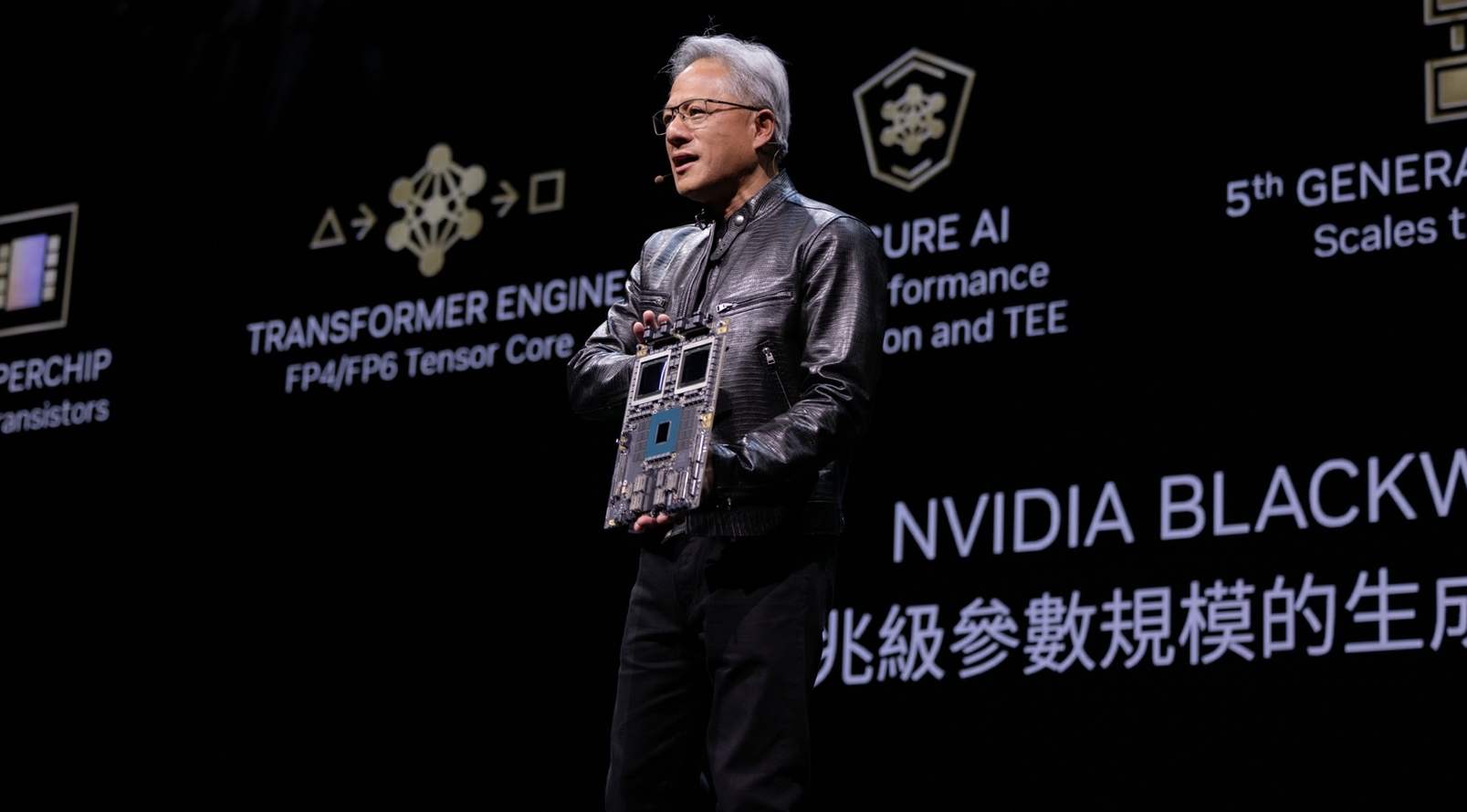

That’s nice and all, but will somebody please think about PC gamers? Actually, Dave put pretty much that very question directly to His Royal Leather Jacketness, AKA Nvidia’s CEO Jensen Huang, after Nvidia’s recent Computex keynote. But hold that thought while we cover off some details. That valuation is based on Nvidia’s current share price and thus market capitalisation.

Obviously, such valuations can change pretty quickly. How long Nvidia will retain its new crown is anyone’s guess. But as of market close yesterday, Nvidia’s share price of $135.58 works out to a market capitalisation of $3.335 trillion. Yes, trillion.
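For anyone who wants to sanity-check those numbers, here is a minimal sketch of the arithmetic. Market capitalisation is simply share price multiplied by shares outstanding, so the two figures quoted above imply a share count. The 1% price move at the end is a hypothetical chosen purely for illustration, not a figure from any report.

```python
# Figures quoted in the article (market close on the day in question).
share_price_usd = 135.58
market_cap_usd = 3.335e12  # $3.335 trillion

# Market cap = share price x shares outstanding, so the implied share count is:
implied_shares = market_cap_usd / share_price_usd
print(f"Implied shares outstanding: {implied_shares / 1e9:.1f} billion")

# Hypothetical 1% move in the share price, purely for illustration.
swing_usd = market_cap_usd * 0.01
print(f"A 1% share price move shifts the valuation by about ${swing_usd / 1e9:.0f} billion")
```

With three companies all sitting just above $3 trillion, a swing of that size is roughly the scale at which the top spot can change hands in a single trading session.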

Of course, both Apple and Microsoft are also worth over $3 trillion in market cap terms. So, it’s more a case of a trio of titans than Nvidia being in a class of its own. Its status as the most valuable company on paper is pretty notional if not ephemeral. But still, the symbolism of this development is significant.

It is, of course, all driven by Nvidia’s success in AI. Exactly how that plays out in future is hard to say. Will Nvidia continue to dominate? Will the likes of Microsoft and Google succeed in building their own AI chips, reducing their dependence on Nvidia?

Might the whole AI thing turn out to be a bit of a ruse? Who knows, though it’s worth recalling how quickly cryptocurrency mining came and went as a driver of demand for Nvidia GPUs. What we can say is that we remember the early days of what Nvidia used to call GPGPU or general purpose computing on graphics chips and how that slowly transitioned into the AI juggernaut we have today.

Funnily enough, for years and years nary a mention did Nvidia make of AI. They talked about things like physics simulation, prospecting for fossil fuels, folding proteins, that kind of thing.

The notion of running AI on its GPUs is a relatively recent phenomenon. So, don’t believe claims that Nvidia saw this all coming. For sure, the company put the time and effort into parallel computing on GPUs that no other company could be bothered with. So, Nvidia deserves this success. But when it started off down a road originally called GPGPU, Nvidia didn’t see AI coming.

Of course, when that journey began Nvidia was very much a gaming graphics company. These days, Nvidia likes to style gaming graphics as merely the first application it happened upon for its massively parallel approach to compute.

The RTX 4060 Ti: The downsized shape of things to come? (Image credit: Future)

Personally, I think that’s a rewriting of history given that Nvidia’s early graphics chips were pretty much pure fixed-function raster hardware with virtually no general purpose compute capacity. But history is written by the victorious, so fighting that battle is a guaranteed loss.

More of a concern is Nvidia’s current attitude to PC gaming. No question, the company continues to invest in gaming graphics, and heavily. The problem is that most of the focus seems to be going into the software side in the shape of its DLSS suite of largely AI-accelerated rendering enhancements including upscaling and frame generation.

For sure, those technologies can be pretty magical. But you could also argue they are being used to downplay the importance of the actual hardware. That’s why the pure rasterisation power of many of Nvidia’s latest RTX 40 series GPUs was relatively disappointing. Nvidia now relies to a greater and greater degree on those clever AI features to deliver higher frame rates.

Meanwhile, despite Intel’s recent efforts, the competition amounts pretty much exclusively to AMD, which has struggled to compete with Nvidia’s DLSS feature set and quality. In that regard, AMD is always a step behind and playing catch up.

If you take something like AMD’s Radeon RX 7800 XT GPU, it’s extremely competitive for plain old raster performance. But it falls ever further behind as soon as you start factoring in fancier features.

Of course, Nvidia isn’t entirely neglecting the hardware. It’s been iterating its ray tracing GPU technology for three generations now, another area where it has a clear advantage over AMD.

But Nvidia’s shift in emphasis is clear enough. AMD can compete when it comes to conventional pixel pumping. But Nvidia’s huge advantage in AI is something that no company can currently match. So, that’s where Nvidia seems to be putting the emphasis with its gaming GPUs.

As for the future, there’s no reason to think Nvidia is going to actually ditch gaming graphics. But it’s probably a safe bet to assume that the company will increasingly view gaming through the lens of AI. And that will likely be a blessing and a curse.


The blessings will be even more remarkable additions to the DLSS family of image-enhancing technologies. The curse will be an even less competitive landscape for gaming graphics where Nvidia can dictate the market to an even greater degree.

That would explain why, if the rumours are true, Nvidia has very modest plans for its next generation of GPUs in pure hardware terms. Some members of the next-gen RTX 50 series are rumoured to actually see regression in terms of things like shader core counts, while memory availability and bus widths look set to stagnate for yet another generation.

If that happens, it’ll be pretty depressing and again it will all come back to AI. So as a PC gamer, and despite how magical some of Nvidia’s gaming technologies undoubtedly are, it’s hard not to feel a little uneasy about Nvidia’s new status as the world’s most valuable company. It’s a huge win for Nvidia, but what it means for PC gaming is a lot more ambiguous.