The project is available online if you fancy giving it a go yourself.

Anyone who has studied electrical engineering will know that with the right tools, know-how, and a lot of perseverance, it’s possible to design a basic CPU from scratch. It won’t be anything like today’s processors, of course, but what about GPUs? Surely they can’t be that different, can they? Well, one determined software engineer decided to design one from scratch and found out that it’s much harder than you’d think.

Adam Majmudar has been chronicling his trials and tribulations on Twitter (via Tom’s Hardware), having first started with learning the fundamentals behind central processor architecture, before moving on to creating a complete CPU. It’s obviously nothing like the chips you can buy for your gaming PC and it reminds me a lot of basic 4-bit processors I learned to design many decades ago.

I’ve spent the past ~2 weeks building a GPU from scratch with no prior experience. It was way harder than I expected. Progress tracker in thread (coolest stuff at the end) 👇 pic.twitter.com/VDJHnaIheb (April 25, 2024)

Modern software packages and hardware description languages simplify the process quite a bit, but it’s still a mighty challenge.

Flushed with success, Majmudar then decided it was time to do the same with a GPU. After all, the basic structure of a shader unit is nothing more than an arithmetic logic unit, with some registers to store data, another unit to load and store said data, and something to manage the whole process of doing an operation.
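To make that structure a little more concrete, here is a minimal Python sketch of a single-thread compute core with those four pieces: an ALU, a register file, a load/store unit, and a control loop. The instruction names (`CONST`, `LDR`, `STR`, `ADD`, `SUB`, `MUL`), the four-register file, the 8-bit data width, and the `Core` class are all invented for illustration; none of them are taken from Majmudar's design.

```python
from dataclasses import dataclass, field


def alu(op, a, b):
    """Arithmetic logic unit: combines two operands, wrapping to 8 bits (assumed width)."""
    if op == "ADD":
        return (a + b) & 0xFF
    if op == "SUB":
        return (a - b) & 0xFF
    if op == "MUL":
        return (a * b) & 0xFF
    raise ValueError(f"unknown ALU op: {op}")


@dataclass
class Core:
    memory: list                                            # data memory
    regs: list = field(default_factory=lambda: [0] * 4)     # register file
    pc: int = 0                                             # program counter

    # Load/store unit: the only path between registers and memory.
    def load(self, addr):
        return self.memory[addr]

    def store(self, addr, value):
        self.memory[addr] = value

    # Control: fetch, decode, and execute one instruction at a time.
    def run(self, program):
        while self.pc < len(program):
            op, *args = program[self.pc]
            if op == "CONST":        # rd <- immediate
                rd, imm = args
                self.regs[rd] = imm
            elif op == "LDR":        # rd <- memory[regs[ra]]
                rd, ra = args
                self.regs[rd] = self.load(self.regs[ra])
            elif op == "STR":        # memory[regs[ra]] <- regs[rs]
                ra, rs = args
                self.store(self.regs[ra], self.regs[rs])
            else:                    # rd <- alu(op, regs[ra], regs[rb])
                rd, ra, rb = args
                self.regs[rd] = alu(op, self.regs[ra], self.regs[rb])
            self.pc += 1


def demo():
    """Add memory[0] and memory[1], then write the sum to memory[2]."""
    core = Core(memory=[3, 4, 0])
    core.run([
        ("CONST", 0, 0), ("LDR", 1, 0),   # r1 = memory[0]
        ("CONST", 0, 1), ("LDR", 2, 0),   # r2 = memory[1]
        ("ADD", 3, 1, 2),                 # r3 = r1 + r2
        ("CONST", 0, 2), ("STR", 0, 3),   # memory[2] = r3
    ])
    return core.memory
```

Calling `demo()` runs a seven-instruction program that loads two values, adds them, and stores the result. Real hardware does this with clocked logic rather than a Python loop, so treat it purely as a mental model.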

The engineer rapidly realised that while CPUs and GPUs do share a lot of common aspects, the latter are very different in how they use memory and manage threads. It’s also worth noting that Majmudar wasn’t aiming to make a ‘graphics’ GPU but rather a GPGPU—in other words, he wasn’t designing systems like triangle setup, TMUs, ROPs, or any of the numerous fixed function circuits that the chips in graphics cards have.
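The thread-management difference can be sketched in a few lines of Python. In this simplified model, every thread has its own private registers and a thread ID, all threads step through the same instruction together, and they all read and write one shared memory. The `launch` function, the kernel steps, and the memory layout are hypothetical names made up for this example, and the inner loop merely stands in for work that real hardware does in parallel. A real design also has to arbitrate between threads competing for memory access, which this sketch ignores.

```python
N = 4                                  # four threads, one per matrix element
A_BASE, B_BASE, C_BASE = 0, 4, 8       # assumed layout of three flattened 2x2 matrices


def launch(kernel, num_threads, memory):
    """Run every kernel step across all threads in lockstep."""
    threads = [{"tid": t, "regs": {}} for t in range(num_threads)]
    for step in kernel:                # one shared program counter
        for thread in threads:         # same instruction, different data
            step(thread, memory)
    return memory


# Kernel steps: each thread uses its own ID to pick which element it works on.
def load_a(thread, mem):
    thread["regs"]["a"] = mem[A_BASE + thread["tid"]]


def load_b(thread, mem):
    thread["regs"]["b"] = mem[B_BASE + thread["tid"]]


def add(thread, mem):
    thread["regs"]["c"] = thread["regs"]["a"] + thread["regs"]["b"]


def store_c(thread, mem):
    mem[C_BASE + thread["tid"]] = thread["regs"]["c"]


def demo():
    """Add two 2x2 matrices stored row by row in shared memory."""
    memory = [1, 2, 3, 4] + [10, 20, 30, 40] + [0] * 4
    launch([load_a, load_b, add, store_c], N, memory)
    return memory[C_BASE:]
```

Here `demo()` adds two 2x2 matrices element by element, with each of the four threads handling exactly one element. The program itself never loops over the data; the parallelism comes from launching one thread per element.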

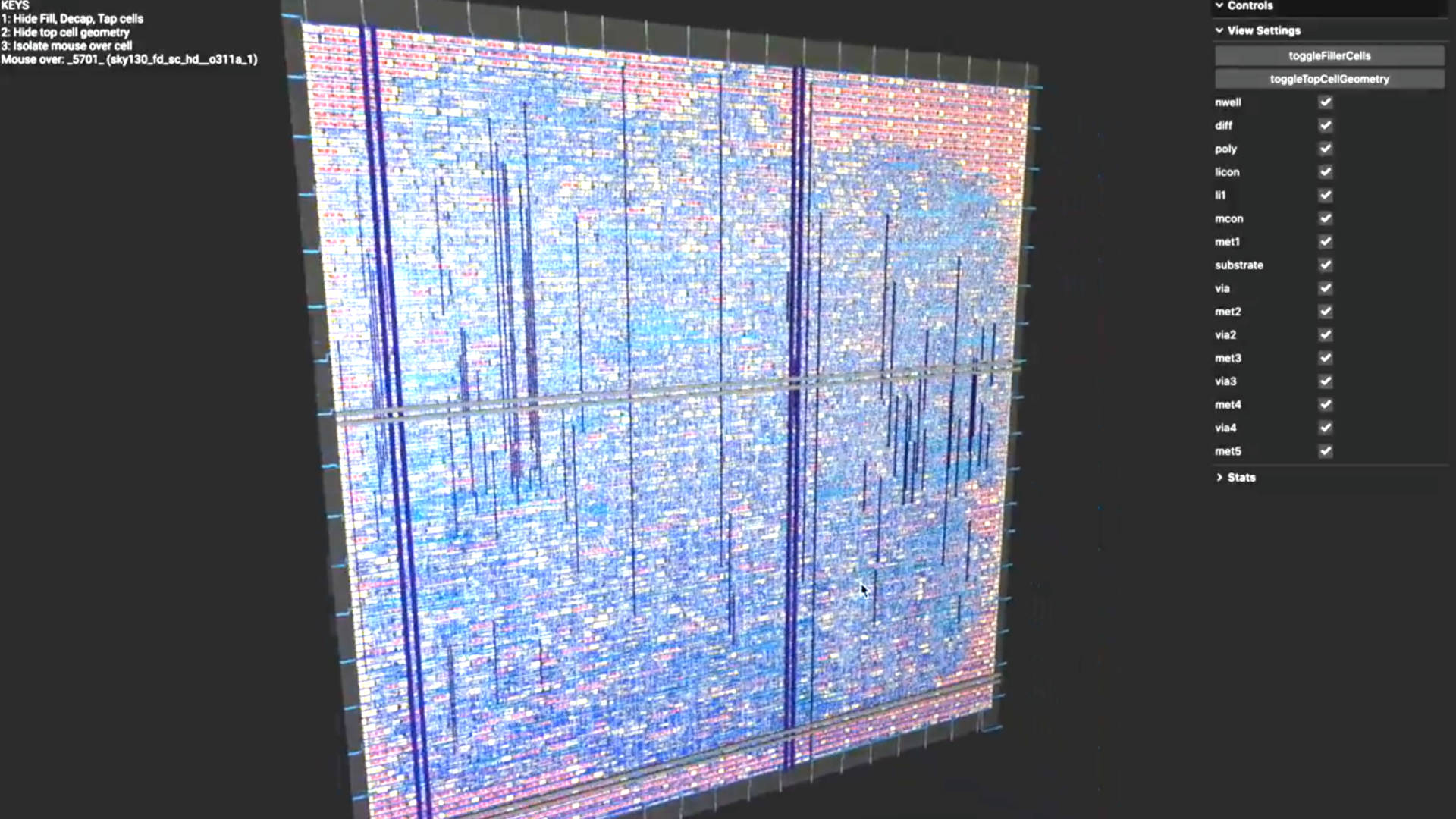

But, to his credit, Majmudar got his basic GPU design working after solving a number of issues with the help of others. In software simulation, it was able to run a small number of instructions and crunch through some matrix calculations. His CPU and GPU designs will be put into physical form via the Tiny Tapeout project.

The best part about Majmudar’s work is that he’s shared the whole project on GitHub as a resource that anyone can use if they want to know more about how a GPU works in the depths of its hardware.

Anyone can learn to program a GPU, simply because all of the necessary tools are readily available online, along with a raft of tutorials and exercises to follow. Learning how to design a shader unit at a transistor level is a different thing entirely, as none of the big three chip makers (AMD, Intel, Nvidia) share such information publicly.

Now, if you’ll excuse me, I’m off to design a GPU with 100,000 shaders. Wonder what name I should sell it under—Raforce? Gedeon? Knowing my skills, GeFarce will be more appropriate, I think.