Does an NPC that reacts to anything I say into a microphone actually lead to more player agency?

My favorite news story of 2023 had nothing to do with AI, or at least I didn’t think it did until recently, when I tried two demos from developers who are using parts of Nvidia’s “digital human technologies suite” to “bring game characters to life.” The story I’m talking about is this one: “How deep was Deus Ex’s simulation? A literal piece of paper could set off a laser trap.” It’s about a 24-year-old videogame, and specifically about something so obscure and unlikely happening in that game that someone had only just seen it for the first time, decades later.

By today’s standards Deus Ex is crude in many ways: small environments, chunky graphics, awkward controls. But it’s also a triumph of design, with every tiny gear within it crafted to interlock with half a dozen other parts in ways both intentional and unintentional-yet-logical. Were Deus Ex’s designers planning for that piece of paper to set off that laser trap and blow someone up? No, but they built a world where even that unlikely interaction plays out in a “realistic” way. The reason we harp on about Deus Ex and other immersive sims is that, more than most videogames, they’ve gone to great lengths to give the question “will this work?” a logical, meaningful, and interesting answer.

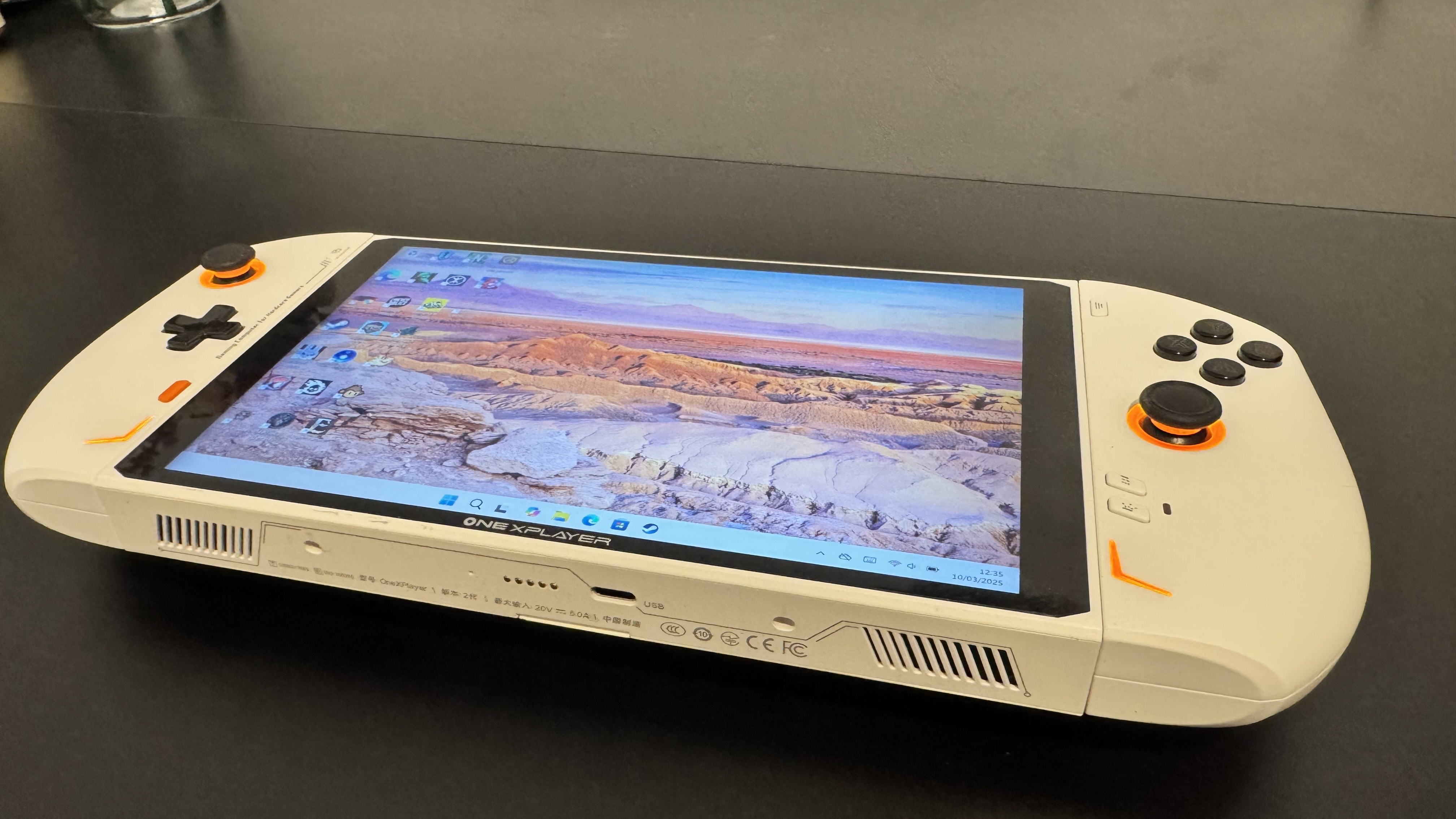

The great immersive sims are still impressive decades after they were made, something that was reinforced for me at the Game Developers Conference last month when I played demos powered by Inworld AI, which calls itself “the leading AI engine for games.” The demos hyped up the open-ended wow factor of being able to actually talk to an NPC—I spoke into a microphone, and the game processed my voice like Siri would on an iPhone, before the NPC spoke back. It’s a neat trick. Impressive, even!

But I came away from the demos struggling to see the technology as much more than a gimmick, and skeptical that the wide-open possibility space of “say anything you want” will translate into meaningful interactions, because developers still have to plan for how any given conversation ties into the rest of the game. The more I thought about the demos, the more it felt like an incredible amount of money and effort being spent to chase the wrong sort of believability (“natural” but ultimately hollow conversations) when those vast resources could instead be dedicated to a world as densely reactive as Deus Ex’s.

NPCs vs. the world

Inworld AI uses large language models to allow NPCs to react to dialogue and spit out their own unscripted replies; some underlying Nvidia tech handles the speech-to-text processing and facial animations. The NPCs’ voices were supplied by actors who recorded enough lines for the AI to then simulate an artificial version of each voice, and the performance was honestly impressive; I’ve heard worse delivery in games before.

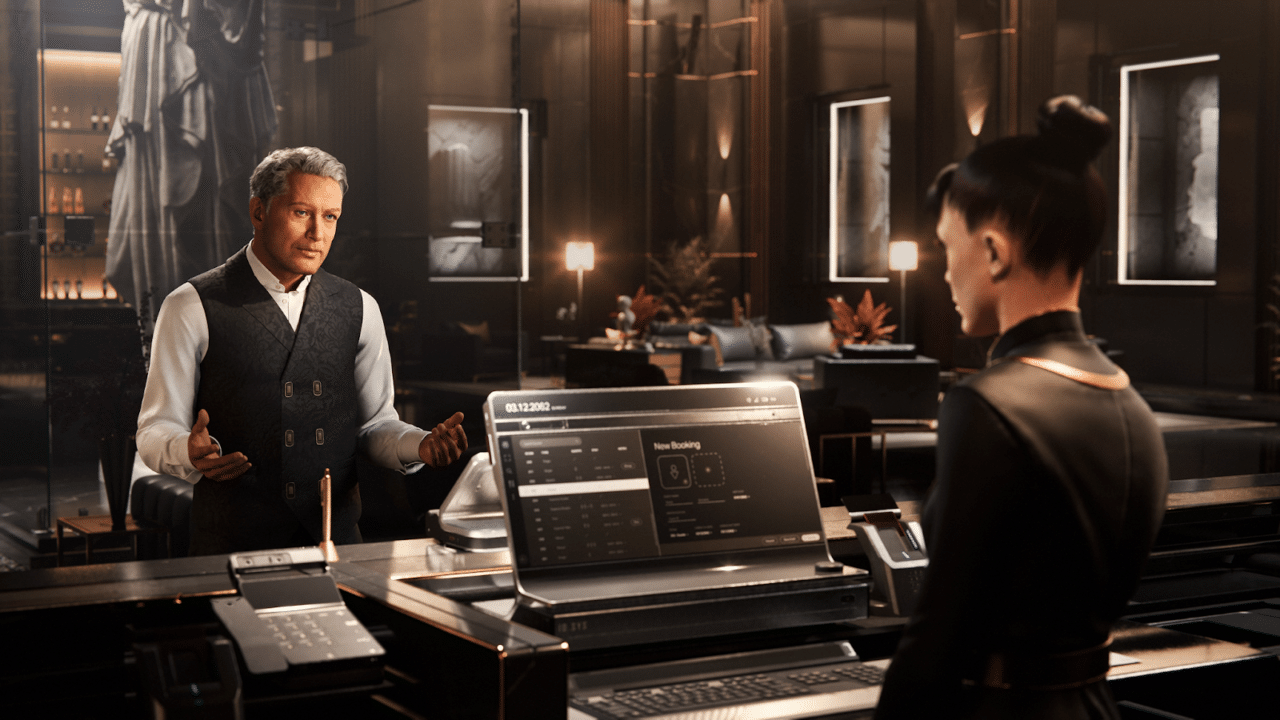

In one demo called Covert Protocol, I wandered a shiny (RTX ON!) hotel lobby in what felt a bit like an adventure game sequence, looking for clues and a couple of key items that would help me make my way to another floor to do some spy stuff. I could talk to the desk attendant and a surly man named Diego, who was getting ready to speak at a conference. Tricking Diego, or ingratiating myself with him, was the key to getting a bit of information I needed to progress. Instead of choosing from a variety of pre-written dialogue options, I could say whatever I wanted, so I immediately tried to poke holes in the simulation. I asked Diego what his favorite Taylor Swift album was. He clearly understood the gist of the question, but instead of naming Taylor or a specific album, he said he didn’t have a favorite and then grumpily reminded me he was prepping for his speech.

There was some potential for cleverness here (lying about my identity, pressing for information in different ways), and interacting with my actual voice felt immersive in a way picking dialogue options usually doesn’t, though a noticeable lag of a few seconds while each line was processed really diminished how “natural” each exchange felt. It was also hard to shake the feeling that I was talking to an empty vessel that existed for me to probe, rather than a character with things to say crafted by a human writer. But I could see writers crafting many set lines of dialogue for NPCs, then using this sort of AI to “fill in the gaps” with additional responses they hadn’t planned for.

What’s harder for me to picture is how any interaction I have with one of these NPCs would make most videogames any better. In a buzzword-heavy promotional video for Covert Protocol, Inworld’s CEO says that its platform “unlocks groundbreaking game mechanics” and that “every player will have a unique experience of Covert Protocol because NPCs dynamically adapt to the decisions and actions they take in the game.” To those points, I’d say: one, hey man, don’t forget about Seaman, and two, how many of those “unique” experiences will actually matter?

In Covert Protocol, anything I said in conversation with Diego ultimately had to funnel into a couple of very limited outcomes: I got some bit of info from him, or I got him to go talk to the desk clerk, allowing me to overhear something that would help me progress. In the second demo, one made by Ubisoft but running on the same Inworld AI tech, I chatted with an NPC to plan a heist, but she shot down most of my ideas in an agonizingly slow back-and-forth until I made the specific suggestions she wanted to hear. It was frustrating, though of course a demo isn’t representative of how much better this technology could be someday. But think about it: the AI couldn’t possibly take me seriously if I said “let’s parachute onto the roof from a zeppelin” unless Ubisoft had already designed a zeppelin and a parachuting sequence into the game. All of that conversational freedom still has to narrow down to a select set of actions or solutions.

And that’s jarring—it highlights the fact that you can say whatever you want, but you really can’t do whatever you want. Even if an NPC can say something surprising to me, my player agency remains just as hemmed in. Yes, human-written dialogue inevitably provides fewer options, but as a player I know all of those options matter in some way. In immersive sims especially, I know that the dialogue options I can choose from are part of the confines of the game world, but within those confines everything from an intimidation check to a stackable crate is a potential tool at my disposal.

Having those clearly defined limits, rather than illusory freedom, actually makes the world feel more “real” in its own way, or at least cohesive.

(Image credit: Inworld AI, Ubisoft)

The essence of a great immersive sim is that its designers have given the player a variety of interesting approaches to a situation, with certain explicit levers to manipulate, plus emergent elements, like physics, that let really creative players solve problems in ways the developers didn’t explicitly design for. “Thinking outside the box” lets us discover those undesigned-for solutions, but it’s hard to picture that working with these sorts of AI dialogue systems when the developers still have to curate the possible outcomes.

So what does the creator of Deus Ex think?

I put this skepticism to Deus Ex director Warren Spector, who happens to be working on an upcoming immersive sim of his own (though not using any of this AI technology). He’s actually more optimistic about it than I am, though he cautioned that he’s far from an expert on the ins and outs of AI. Spector told me over email that he’s “been complaining since 2008 about how terrible game conversations were,” and in his view they still are, with little having changed since the ’90s. Most games still use branching dialogue trees to represent reactivity, he pointed out.

“Our lack of progress in NPC interaction is nothing short of pathetic (to be fair conversation is a tough problem and I don’t know how to solve it myself),” he said. “But branching trees turn conversation into puzzles. They’re boring. And generating enough dialogue to fail to satisfy players is time-consuming and costly. Back in ’08 I said we needed natural language processing and sophisticated AI to make real progress. That being the case I’d be a hypocrite if I said I wasn’t at least curious! The big thing for me is that any AI we’d use would have to be trained exclusively on game-relevant information. You don’t want an NPC in a fantasy game talking about Taylor Swift.”

(To be fair to Inworld here, the company’s AI tech includes a feature it calls Fourth Wall that’s “designed to make sure your AI NPCs and AI characters always say a world-appropriate response—no matter how often players try to get them to trip up.” In practice, I do wonder if that’s going to lead to a lot of bland deflections, like the one Diego gave me about his favorite album.)

My pessimism about letting players say anything to NPCs came down to this: those open-ended conversations would ultimately have to lead to a disappointingly limited set of outcomes. Spector doesn’t see that as a given, but he doesn’t think AI automatically opens the door to lots more player agency, either.

“I wouldn’t say AI would, in and of itself, limit emergent possibilities. No one really knows what impact a more freeform approach to conversation and overall behavior would have,” he said. “Anyone who says they know is way smarter than I am (or delusional…).

“Player agency is a big deal and there are lots of ways to accomplish it. If you’re crazy you can script a lot of player options. If you’re less crazy you can simulate physical forces and build game systems that allow players to use systemic possibilities, tools, the world itself, and their own wit to deal with game challenges. The question is whether there are other things to simulate beyond physical forces. There’s some great imagination and design work to throw at that. I can see ways AI could help with it. (Remember I’m not an expert on this and people who are will probably get a giggle out of my thoughts!)”

While I get Spector’s frustration at videogame dialogue being largely unchanged since the ’90s, I actually like the fact that conversations can deliver human emotion while also serving as a form of puzzle. Immersive sims are impressive because of how much they let you do within their limits, and those limits are vital. In the same way that a bigger open world isn’t always better, a lot of ultimately empty interactions aren’t going to beat a few tightly designed, meaningful ones once the novelty wears off.

NPC AI tech like Inworld’s will surely get better, and I could see it being used in interesting ways in experimental games: a next-gen take on Façade, perhaps. But the demos I played only managed to convince me that the way to make a game feel properly “real” will always be to follow the teachings of the immersive sim. Craft lots of cool possibilities by hand, because generative AI won’t make the game fun for you.