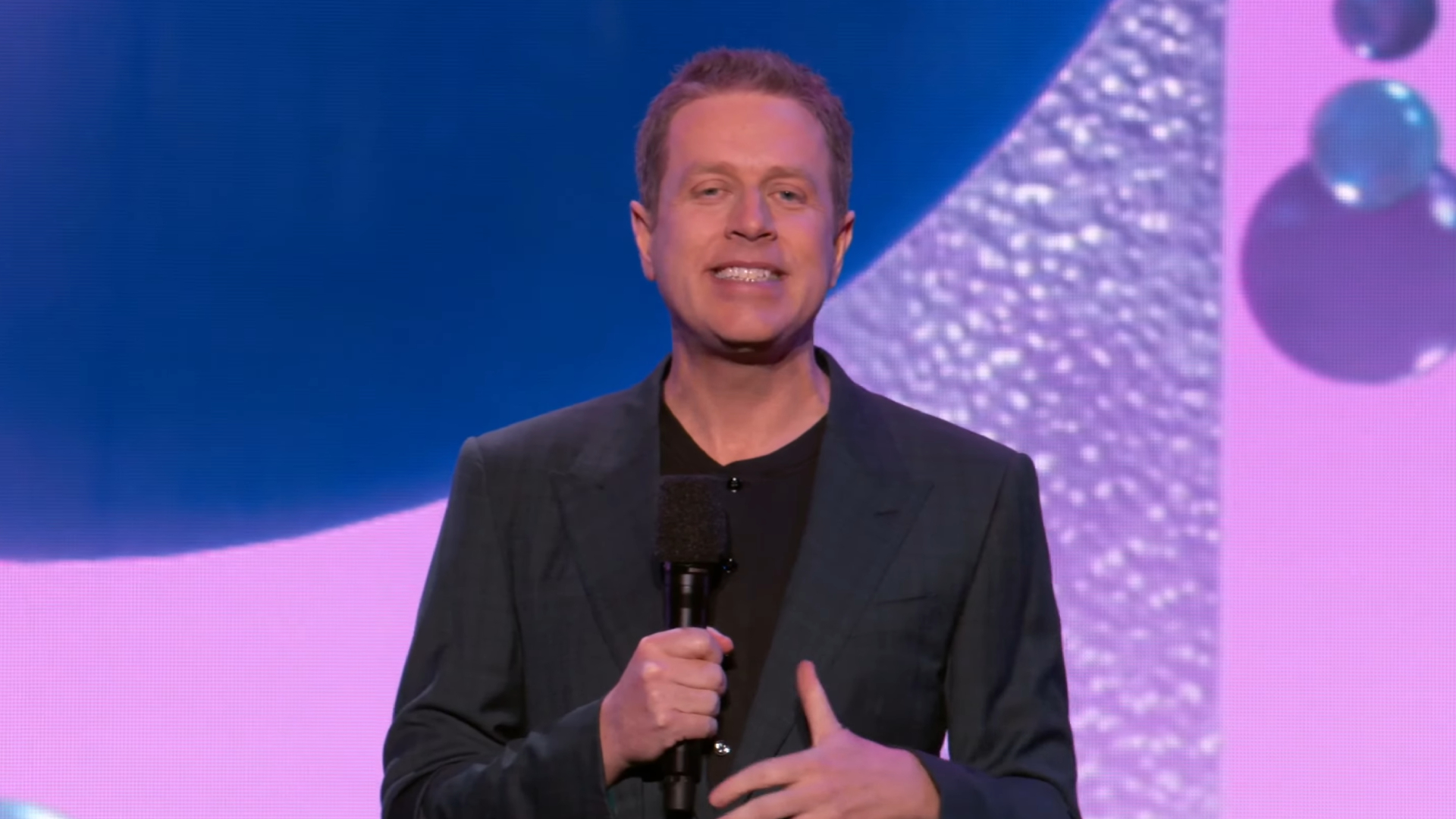

OpenAI's Sam Altman answered lawmakers' questions about the risks of AI and how it should be regulated.

OpenAI CEO Sam Altman, IBM chief privacy and trust officer Christina Montgomery, and New York University professor emeritus Dr Gary Marcus were questioned today by a US Senate subcommittee about the potential risks of artificial intelligence and how it should be regulated. Lawmakers expressed concerns over the potential for AI-generated misinformation and inaccuracies (chatbots have a tendency to lie), political bias, and copyright infringement.

Altman told senators that this developing technology requires “regulatory intervention by governments,” which is “critical to mitigate the risks of increasingly powerful models.” To that end, the OpenAI CEO said that one or more agencies should be empowered to create and enforce rules for the AI industry, issuing licenses to AI companies and revoking them should their technologies run amok.

Dr Marcus also agreed that creating a new government agency would be the best way to regulate AI, saying we “probably need a cabinet-level organization.” He compared AI models to “bulls in a china shop” in his opening statement.

AI art generation and copyright came up repeatedly during the hearing. Altman told the senators that OpenAI is working on a copyright system that would pay artists if their works were used to generate new art, saying that “artists deserve control.” He also said that new regulations should require AI-generated images to disclose that they were created with AI tools.

Why is Altman, the CEO of one of the biggest players in AI, so keen on regulation? His concerns may well be genuine. But in the past, powerful companies with lots of resources have called for regulation when they think it’s inevitable and want to influence it, or when it would make it harder for smaller startups to break into the industry.

Although he’s pro-regulation, Dr Marcus warned of this danger, known as “regulatory capture”: if done wrong, new regulation could inadvertently create an AI monopoly.

Senator Richard Blumenthal, the subcommittee chairman, said that the government could “create ten new agencies, but if you don’t give them the resources,” big AI companies will “run circles” around them.