Can we generate more Witcher content, please?

Runway Research just announced its upcoming generative AI model, Gen-2, which gives creatives the ability to transform words into videos.

Runway’s Gen-2 video generation model is trained on “an internal dataset of 240M images and a custom dataset of 6.4M video clips.” In other words, the dataset is huge, though there’s no indication as to whether this custom dataset was made by scraping the web for videographers’ work. That may make it not the best thing to use if you’re planning to monetise your generated content.

Still, Gen-2 looks like a pretty powerful evolution of the Gen-1 tool many are already using for storyboarding and pre-production visuals. It’s similar to Meta’s AI video generator, though the Gen-2 model looks to have added some really interesting modes.

Soon the tool will let you generate video through text alone. Not only that, the company is adding a few other modes to its plethora of video editing tools, including one where you feed the algorithm a video clip, which it can then rework in other styles.

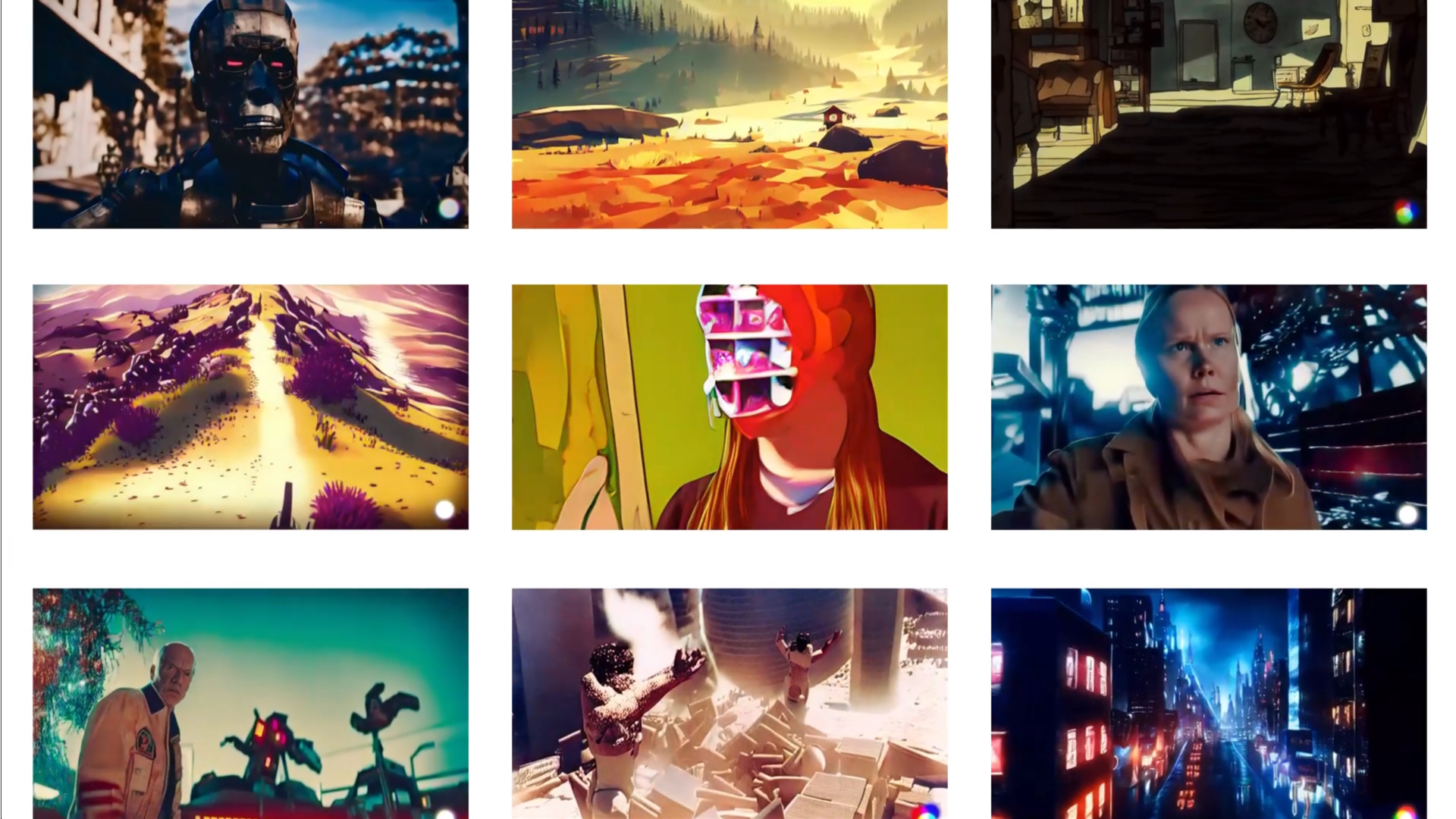

One exciting example in the teaser vid shows a panning shot across some books standing on a table. The Gen-2 model has transformed it into a night-time city scene where the books have become skyscrapers, and while it’s not the most realistic shot, it looks to be a powerful tool for visualising ideas.

Generate videos with nothing but words. If you can say it, now you can see it. Introducing, Text to Video. With Gen-2. Learn more at https://t.co/PsJh664G0Q pic.twitter.com/6qEgcZ9QV4 (March 20, 2023)

The accompanying research paper goes into much more detail about the process. The model has come a long way since conception, and it’s clear from the teaser video that the realism of its output has seen a major hike, though there’s still a way to go before we can generate a feature-length Half-Life movie, for example.

It’ll be interesting to see how it does, and what kind of changes it will make to the industry as these models become ever more advanced. As Runway says on its about page: “We believe that deep learning techniques applied to audiovisual content will forever change art, creativity, and design tools.”

How the Runway model has changed to become more realistic. (Image credit: Runway)

When you sign up to Runway, you’ll be presented with the option to nab the free plan plus some paid versions, though there doesn’t appear to be an option to register interest in the Gen-2 model at this moment. A dive into the Discord channel revealed the company is likely to launch Gen-2 as an open, paid beta, but is currently a bit overwhelmed with interest.

Gen-2 will hopefully come with the ability to train your own custom AI video generator, too, much as you can with many image models.

As Dave laid out in his article on training a diffusion model on his uncle’s art, it can be a rather arduous process, though super rewarding. Hopefully Runway will make the process a little easier.