Here's what inspired Scottie Fox to create this immersive AI tool, and where it might go in the future.

AI image generation tools are catching the eye of developers across the globe right now, and it was only a matter of time before someone managed to pair the magic of AI image generation with the immersion of VR.

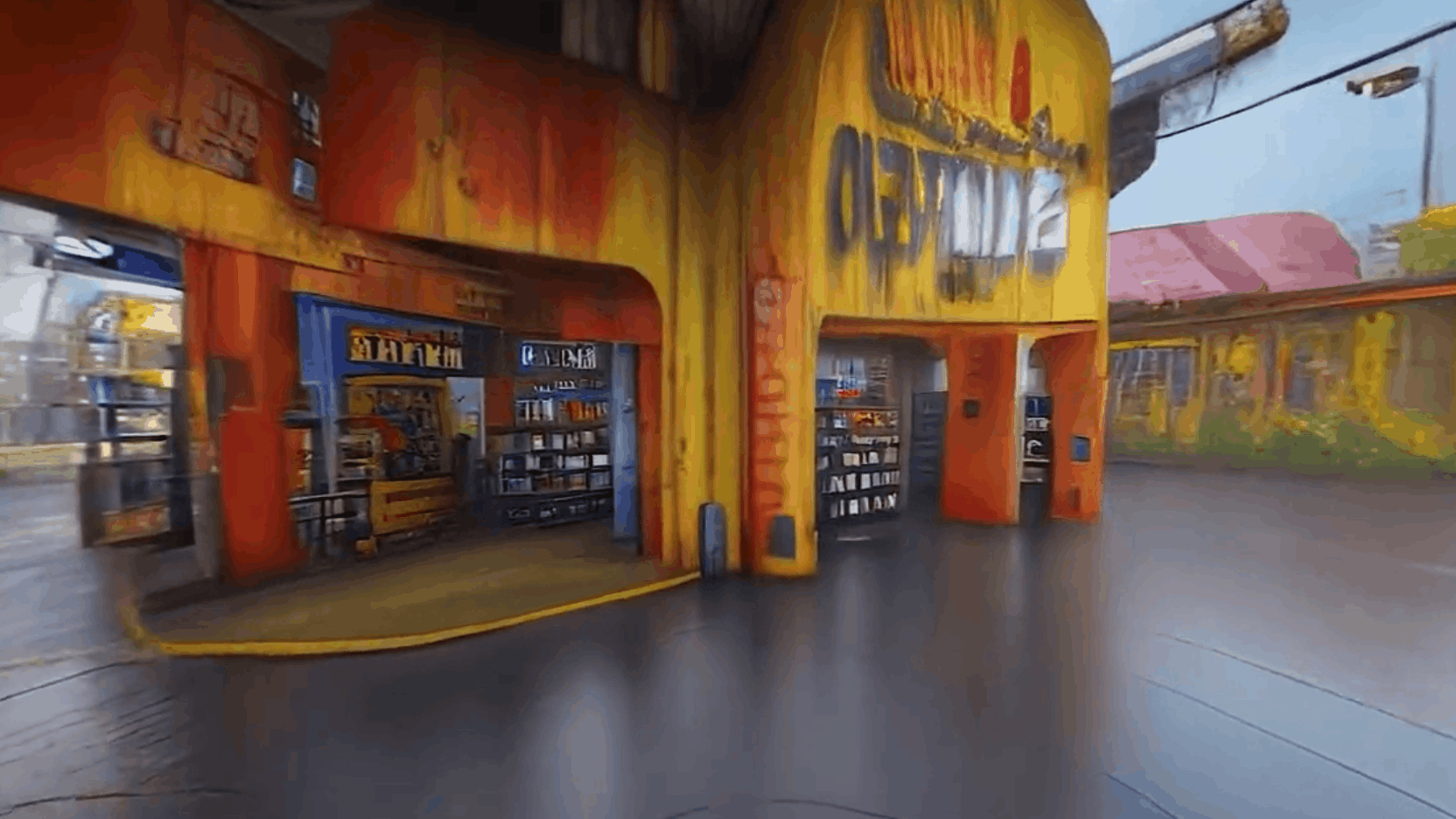

That’s exactly what Scottie Fox has brought to life with Stable Diffusion VR, an immersive experience that brings AI image generation into a 360° space. It does so without the need to rent gigantic servers to process everything, instead relying on consumer PC hardware, and it brings the user right into the middle of a continually shifting dreamworld powered by the Stable Diffusion image generator.

Although it’s a long way from completion, even in the experimentation stage it’s looking fantastic.

I’ve been speaking to Scottie about what it’s like to work on a cutting-edge project at the intersection of technology and art. Not only has he given us insight into how he overcame certain hurdles on the project and where his inspiration came from, he’s also offered a glimpse of the future of AI image generation in gaming.

Scottie tells me the muses struck him in the middle of the night with the inspiration for the project. When asked to elaborate, he describes the awkwardness of a night-time eureka moment.

“Unfortunately that ‘light bulb’ over your head, that ‘Eureka’ moment, can happen at any time. For me, I was hitting a brick wall during the late-night conception of this project and powered down my PC and shut off my office lights for the evening,” he says. “After an hour of restless sleep, I literally was awoken with a very simple and promising solution to the battle I fought all day with. In my night attire, I returned to my office, fired everything up and tested my theory in practice. ‘EUREKA!’ indeed. It wasn’t just a passing dream.”


It’s great to see devs acting on their passing fancies; it’s kind of frightening how many great concepts are likely left by the wayside because people put things like sleep ahead of progress. Thankfully, Scottie isn’t one of those people, and has been experimenting fervently on the project ever since.

When I ask about the iterations his Stable Diffusion VR project has been through, he notes that, “Over the years, I’ve focused on the spectacle of ‘real-time visual effects.’ Only recently has consumer grade hardware been able to perform tasks that are as resource intensive as real-time rendering.”

(Image credit: Scottie Fox)

The hardware he’s been using to develop Stable Diffusion VR includes an RTX 2080 Ti graphics card, backed by an AMD Threadripper 1950X. That’s a lot of computing power, and although you may shrug your shoulders at a 20-series graphics card, remember that not long ago this was the best GPU money could buy.

All things considered, it’s amazing to see consumer grade hardware handling the high loads that both artificial intelligence and virtual reality entail. As Scottie explains, “Whether it be particle simulation, or motion capture interaction, a lot goes into creating a smooth, vivid, immersion experience.

“One of the biggest challenges I faced in this project was trying to diffuse content (a heavy task) in a seamless, continuous fashion in such a way that immersive environments could be visualised.


“Even with current cloud-based GPU systems, and high-end commercial runtimes, attempting to ‘render’ a single frame takes many seconds or minutes—unacceptable for real-time display. But that’s exactly what I wanted; to be able to render a 360° environment, completely diffused with iterated content. That was the first goal—the very one that I struggled with the most.”

As we’ve seen in many of the project vids, it’s clear Scottie has managed to overcome the hurdle of processing power with flying colours. He’s been able to bring all this fancy software to us without needing to rent out a bunch of server space in order to process everything—something that can get pretty costly.

To do this, Scottie had to “break the entire process into small pieces, scheduled to be diffused.” Each of the blue squares you see in the video below is a section queued up to be processed in the background.

Stable Diffusion VR: Real-time immersive latent space. ⚡️ Adding debug functionality via TouchDesigner. Diffusing small pieces into the environment saves on resources. Tools used: https://t.co/UrbdGfvdRd https://t.co/DnWVFZdppT #aiart #vr #stablediffusionart #touchdesigner pic.twitter.com/TQZGvvA5tH (October 13, 2022)

“These pieces are sampled from the main environmental sphere, and once complete, are queued back into the main view through blending,” Scottie notes. “This saves time by not having to render and diffuse the entire environment at once. The result is a view that seems to slowly evolve, seamlessly, while in the background a lot of processing is taking place.”
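The article doesn't show how the tiling works inside Scottie's TouchDesigner setup, so what follows is only a minimal Python sketch of the general idea he describes, built on assumptions of our own: the 360° environment is stored as a small equirectangular grid of grayscale values rather than a true sphere, `fake_diffuse` is a hypothetical placeholder for a real Stable Diffusion image-to-image call, and each finished tile is linearly cross-faded back into view over a few frames. Every name here (`Tile`, `Job`, `TileScheduler` and so on) is made up for illustration.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass
from typing import Callable

# A grayscale panorama stored as rows of floats (a stand-in for an RGB image).
Grid = list[list[float]]


@dataclass
class Tile:
    row: int   # top-left row of the tile in the panorama
    col: int   # top-left column of the tile in the panorama
    size: int  # side length of the square tile


@dataclass
class Job:
    tile: Tile
    start: Grid   # snapshot of the pixels before diffusion
    target: Grid  # the freshly "diffused" pixels
    step: int = 0


def make_tiles(height: int, width: int, size: int) -> deque[Tile]:
    """Split the panorama into a queue of square tiles, row by row."""
    return deque(Tile(r, c, size)
                 for r in range(0, height, size)
                 for c in range(0, width, size))


def sample_tile(env: Grid, tile: Tile) -> Grid:
    """Copy the pixels covered by a tile out of the environment."""
    return [row[tile.col:tile.col + tile.size]
            for row in env[tile.row:tile.row + tile.size]]


def fake_diffuse(patch: Grid) -> Grid:
    """Hypothetical placeholder for a Stable Diffusion img2img call."""
    return [[1.0 - v for v in row] for row in patch]


def write_lerp(env: Grid, tile: Tile, start: Grid, target: Grid, t: float) -> None:
    """Blend a tile into the view: t=0 keeps the old pixels, t=1 shows the new ones."""
    for i, (srow, trow) in enumerate(zip(start, target)):
        for j, (s, g) in enumerate(zip(srow, trow)):
            env[tile.row + i][tile.col + j] = (1.0 - t) * s + t * g


class TileScheduler:
    """Diffuse one tile at a time and fade it in over several display frames."""

    def __init__(self, env: Grid, tile_size: int, blend_steps: int = 8,
                 diffuse: Callable[[Grid], Grid] = fake_diffuse) -> None:
        self.env = env
        self.blend_steps = blend_steps
        self.diffuse = diffuse
        self.pending = make_tiles(len(env), len(env[0]), tile_size)
        self.active: Job | None = None

    def tick(self) -> None:
        """Advance by one display frame."""
        if self.active is None:
            # Start the next queued tile: sample it and "diffuse" the copy.
            tile = self.pending.popleft()
            start = sample_tile(self.env, tile)
            self.active = Job(tile, start, self.diffuse(start))
        job = self.active
        job.step += 1
        write_lerp(self.env, job.tile, job.start, job.target,
                   job.step / self.blend_steps)
        if job.step == self.blend_steps:
            self.pending.append(job.tile)  # re-queue so the scene keeps evolving
            self.active = None
```

Calling `tick()` once per rendered frame means only one small tile is being worked on at any moment in this sketch, and because each finished tile goes back to the end of the queue, the panorama never stops changing. A real version would presumably run the diffusion step asynchronously on the GPU rather than inside `tick()`, and would need to handle seams where tiles wrap around the sphere.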

It’s a super elegant solution, and its effect is fascinating, potentially less overwhelming than shifting the entire scene at once. Efficient as the approach already is, Scottie’s next steps “revolve around practical use in consumer grade hardware, as well as modular functions for integration with other softwares and applications.”

Speaking of integrations, Scottie talks about connecting with other developers at summits and similar events, something that’s really important for anyone looking to develop projects like this one. By learning about and testing the approaches other developers have tried, Scottie has been able to build a strong foundation for his Stable Diffusion VR project.

“As a creator that has explored many 3D rendering and modeling softwares, I grew hungry for more. I finally arrived at Derivative and their product TouchDesigner. For interactive and art installations, this platform has become an industry standard, among other tools. It has the incredible ability to transliterate between protocols and development languages which helps with integrating the many different formats and styles of parallel software.”

WILD! Working to achieve my goals! Lot’s of test effort today. Stable Diffusion in VR + touchdesigner = realtime immersive latent space. This proof of concept is the FUTURE! #aiart #vr #stablediffusion #touchdesigner #deforum pic.twitter.com/Qn5XWJAO7Z (October 7, 2022)

“The other half of my inspiration comes from Deforum—an incredible community of artists, developers, and supporters of art generated by datasets.” There, he says, “You can share your struggles, celebrate successes, and be inspired in ways that you can’t simply do privately.”

Another big challenge for anyone wanting to work with Stable Diffusion is copyright. “Currently, there’s a lot of licensing and legal aspects of tapping into a dataset and creating art from it. While those licences are public, I don’t have the right to redistribute them as my own.”

It’s a thorny issue, and one that has led companies like Getty to ban AI-generated imagery, though Shutterstock seems to be heading in a different direction, planning to pay artists for their contributions to the training material behind an upcoming AI image generation tool.

(Image credit: Scottie Fox)

Scottie makes it clear that “A lot of testing and publishing would need to take place in order for me to bring it from my own PC in my office, into the hands of those that will really enjoy something more than just a demo.”

I’m sure there will be no shortage of businesses, artists and devs willing to work with Scottie, as he notes, “I have been approached by an incredibly diverse amount of development companies that are interested in my project.”

Some of the wilder ideas include “a crime scene reconstruction tool where a witness could dictate and ‘build’ their visual recollection to be used as evidence in a court of law. In another case, a therapeutic environment where a trained healthcare provider could custom tailor an experience for their patient based on the treatment they see fit.”

Lots of potential for the project to go in directions other than gaming, then.

(Image credit: Scottie Fox)


Scottie feels that when it comes to AI in the future, “successful companies will combine their current technologies with AI content to create a hybrid game genre. They will have the fluidity of their familiar technology with the added functionality of AI assisted environments.” He sees potential for AI in games in bug testing, in personalised escape room experiences, and in horror or RPG monsters that evolve from one game to the next.

On that terrifying note, I’ll leave you with some inspirational words from the man himself. Should you be considering a project like this, remember, “Sometimes the longest journey occurs between the canvas and the paintbrush.” As a former art student, I concur.

It’s also worth remembering that “Even the most creative of minds will hit an obstacle,” so keep on developing. And don’t ignore the muses when they strike in the middle of the night.